Text mining in R

As data becomes increasingly available, the need to organise and understand it also grows. Since an estimated 80% of data is unstructured, text mining is a valuable way for organisations to generate insights and improve decision-making. So I decided to experiment in R with its text mining package “tm”, one of the most popular choices for text analysis in R, to see how useful insights drawn from Twitter were in understanding people’s sentiment towards the 2020 US elections.

What is Text Mining?

Machines need to interpret unstructured data in order to understand human language and extract meaning from it. This is the job of natural language processing (NLP), a field of artificial intelligence that relies heavily on machine learning. Text mining uses NLP techniques to transform unstructured data into a structured format in which meaningful patterns and new insights can be identified.

A fitting example is social media data analysis: since social media is becoming an increasingly valuable source of market and customer intelligence, it provides us with raw data to analyse and predict customer needs. Text mining can also help us extract the sentiment behind tweets and understand people’s emotions towards what is being sold.

Setting the scene

This brings us to my analysis of a dataset of tweets about the US elections that took place in 2020. Over a million tweets about Donald Trump and Joe Biden were put through R’s text mining tools to draw some interesting analytics and see how they measure up against the actual outcome – Joe Biden’s victory. My main aim was to perform sentiment analysis on these tweets to gauge what US citizens were feeling in the run-up to the elections, and whether there was any correlation between these sentiments and the election outcome.

I found the Twitter data on Kaggle, in two datasets: one of tweets about Donald Trump and the other of tweets about Joe Biden. The tweets were collected using the Twitter API, split according to the hashtags ‘#Biden’ and ‘#Trump’, and updated right until four days after the election, when the winner was announced after delays in vote counting. There were 1.72 million tweets in total, meaning plenty of words to extract emotions from.

The process

I will outline the process of transforming the unstructured tweets into a more intelligible collection of words, from which sentiments could be extracted. But before I begin, there are some things I had to think about for processing this type of data in R:

1. Memory space – Your laptop may not provide the memory you need for mining a large dataset in RStudio Desktop. I used RStudio Server from my Mac to access a more powerful machine suited to the size of the data at hand.

2. Parallel processing – I first used the ‘parallel’ package as a quick fix for memory problems encountered creating the corpus. But I continued to use it for improved efficiency even after moving to RStudio Server, as it still proved to be useful.

3. Every dataset is different – I followed a clear guide on sentiment analysis posted by Sanil Mhatre. But I soon realised that although I understood the fundamentals, I would need to follow a different set of steps tailored to the dataset I was dealing with.

First, all the necessary libraries were loaded to run the various transformation functions: tm, wordcloud and syuzhet for the text mining processes; stringr for stripping symbols from tweets; parallel for parallel processing of memory-intensive functions; and ggplot2 for plotting visualisations.

I worked on the Biden dataset first and planned to apply the same steps to the Trump dataset, provided everything went well the first time round. The first dataset was loaded in and stripped of all columns except the tweet text, as I aimed to use just the tweet content for sentiment analysis.

The next steps required parallelising computations. First, a cluster was set up with (the number of processor cores – 1) workers; in my case, 8 – 1 = 7 workers on the server. Then, the appropriate libraries were loaded on each worker with ‘clusterEvalQ’ before using a parallelised version of ‘lapply’ to apply the corresponding function to each tweet across the workers. This speeds up the computation whatever memory is available.
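The worker setup described above can be sketched as follows. The author's code is in R (the parallel package's cluster functions and a parallelised lapply); this is only a Python analogue using the standard library, and the names `worker_count`, `shout` and `parallel_apply` are hypothetical.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def worker_count(cores=None):
    """Leave one core free for the rest of the system: cores - 1, but at least 1."""
    if cores is None:
        cores = os.cpu_count() or 1
    return max(1, cores - 1)


def shout(text):
    """Stand-in for a per-tweet function; defined at module level so it can be pickled."""
    return text.upper()


def parallel_apply(func, items, workers):
    """Apply func to every item across worker processes, preserving input order."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # chunksize batches items so that inter-process overhead stays small
        return list(pool.map(func, items, chunksize=1000))
```

With 8 cores, `worker_count(8)` gives the 7 workers mentioned above. In a script, a call such as `parallel_apply(shout, tweets, worker_count())` would normally sit under an `if __name__ == "__main__":` guard so that worker processes can import the module safely.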

So, the tweets were first cleaned by filtering out the retweet, mention, hashtag and URL symbols that cloud the underlying information. I wrapped all the relevant substitutions in one larger function, each replacing different symbols with a space character. This function was parallelised as some of the ‘gsub’ calls are time-consuming on a dataset of this size.
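A minimal sketch of this kind of cleaning function, written in Python rather than the author's R and gsub. The patterns are assumptions about what "retweet, mention, hashtag and URL symbols" covers; in particular, this version drops the whole hashtag and mention token, which may differ from the original code.

```python
import re

# Each pattern is an assumption about the symbols being filtered out.
URL = re.compile(r"https?://\S+")
RETWEET = re.compile(r"\bRT\b")
MENTION = re.compile(r"@\w+")
HASHTAG = re.compile(r"#\w+")


def clean_tweet(text):
    """Replace URLs, retweet markers, mentions and hashtags with a space."""
    for pattern in (URL, RETWEET, MENTION, HASHTAG):
        text = pattern.sub(" ", text)
    return text


cleaned = clean_tweet("RT @user: Vote now! #Biden https://t.co/abc")
```

Replacing with a space rather than an empty string avoids gluing neighbouring words together; the extra whitespace this leaves behind is what the later whitespace-stripping step removes.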

A corpus of the tweets was then created, again with parallelisation. A corpus is a collection of text documents (in this case, tweets) that is organised in a structured format. ‘VectorSource’ interprets each element of the character vector of tweets as a document before ‘Corpus’ organises these documents, preparing them to be cleaned further using some functions provided by tm. Steps to further reduce the complexity of the corpus text included: converting all text to lowercase, removing any residual punctuation, stripping whitespace (especially that introduced in the customised cleaning step earlier), and removing English stopwords that do not add value to the text.
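The four normalisation steps listed above can be illustrated with a short Python sketch. It is not tm: the stopword set below is a tiny hypothetical sample, whereas tm ships a much longer English list, and `normalise` is an illustrative name.

```python
import string

# Tiny illustrative stopword set (an assumption, not tm's list).
STOPWORDS = {"a", "an", "and", "for", "is", "of", "the", "to"}
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalise(doc, stopwords=STOPWORDS):
    """Lowercase, drop ASCII punctuation, collapse whitespace, remove stopwords."""
    doc = doc.lower()
    doc = doc.translate(_PUNCT_TABLE)
    tokens = doc.split()  # split() also collapses runs of whitespace
    return " ".join(t for t in tokens if t not in stopwords)


corpus = [normalise(t) for t in ["Vote  NOW, for the future!", "Hope is a plan."]]
```

The order matters: lowercasing first means the stopword comparison only needs lowercase entries.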

The corpus then had to be converted into a matrix, known as a term-document matrix, describing the frequency of terms occurring in each document. The rows represent terms and the columns represent documents. This matrix was still too large to process further without removing sparse terms, so a sparsity level of 0.99 was set and the resulting matrix only contained terms appearing in at least 1% of the tweets. It then made sense to sum each term’s counts across the tweets and create a data frame of the terms against their total frequencies.

Initially I experimented only with wordclouds to get a sense of the output words. Upon observation, I realised common election terminology and US state names were also cluttering the results, so I filtered out a character vector of them, e.g. ‘trump’, ‘biden’, ‘vote’ and ‘Pennsylvania’, alongside the more common Spanish stopwords, without adding an extra translation step. My criterion was to remove words that would not logically fit under any NRC sentiment category (see below). This removal method worked better than the one tm provides, which proved ineffective and filtered out none of the specified words. It was useful to watch the wordcloud distribution change as I removed words; I started to understand whether the output words made sense given the elections and the process they had been put through.
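To make the sparsity filter and the custom word filter concrete, here is a small Python sketch. It follows the description above (keep terms that appear in at least a given share of documents, then drop a custom list) and is not tm's implementation; how tm treats terms exactly on the threshold may differ. The function name and the sample documents are hypothetical.

```python
from collections import Counter


def term_frequencies(docs, max_sparsity=0.99, banned=()):
    """Total count per term, keeping terms found in at least (1 - max_sparsity) of docs."""
    n_docs = len(docs)
    doc_freq = Counter()  # number of documents containing each term
    total = Counter()     # total occurrences of each term
    for doc in docs:
        tokens = doc.split()
        total.update(tokens)
        doc_freq.update(set(tokens))
    min_share = 1 - max_sparsity
    banned = set(banned)
    kept = {
        term: count
        for term, count in total.items()
        if doc_freq[term] / n_docs >= min_share and term not in banned
    }
    # Most frequent first, ties broken alphabetically
    return sorted(kept.items(), key=lambda kv: (-kv[1], kv[0]))


docs = ["win hope", "win fraud", "win vote", "hope vote"]
top_terms = term_frequencies(docs, max_sparsity=0.5, banned=("vote",))
```

With four toy documents and a sparsity of 0.5, ‘fraud’ is dropped for appearing in only one document and ‘vote’ is dropped by the custom list, leaving ‘win’ and ‘hope’. Taking the first 100 entries of such a list is one way to feed a wordcloud.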

The entire process was executed several times, involving adjusting parameters (in this case: the sparsity value and the vector of forbidden words), and plotting graphical results to ensure its reliability before proceeding to do the same on the Trump dataset. The process worked smoothly and the results were ready for comparison.

The results

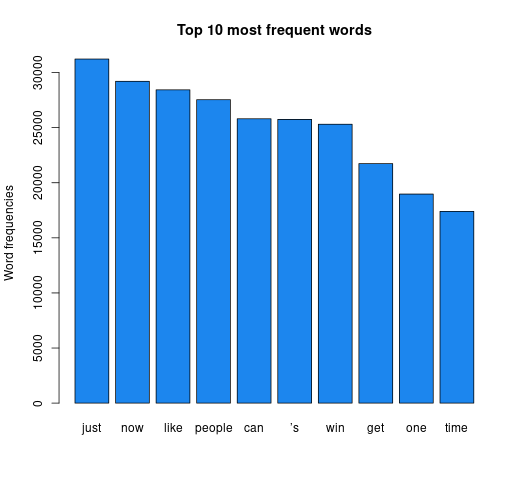

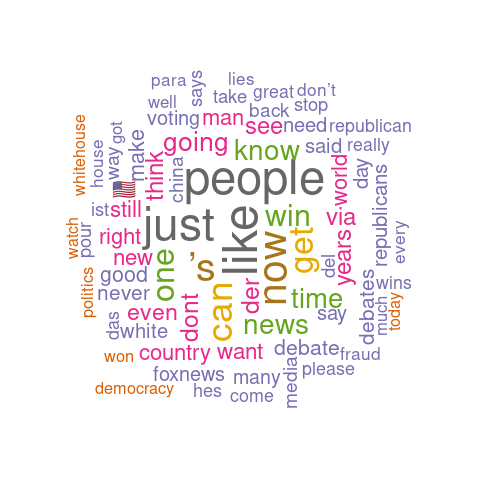

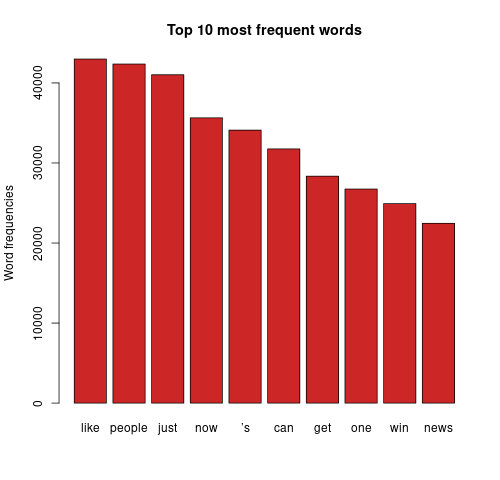

First on the visualisation list were wordclouds – a compact display of the 100 most common words across the tweets, as shown above.

The bigger the word, the greater its frequency in the tweets. Briefly, the word distributions for the two candidates are moderately similar, with the biggest words common to both clouds. This can also be seen in the bar charts, where the only differing words are ‘time’ and ‘news’. A few stopwords from European languages that tm left behind remain in both corpora, with the English ones being more frequent. However, some of the English ones can be useful sentiment indicators, e.g. ‘can’ could indicate trust. Some smaller words are less valuable as they are ambiguous to categorise without clear context, e.g. ‘just’ and ‘now’, while ‘new’ may come from ‘new york’ or point to anticipation for the ‘new president’. Nonetheless, there are some reasonable connections between the words and each candidate; some words in Biden’s cloud do not appear in Trump’s, such as ‘victory’, ‘love’ and ‘hope’. ‘Win’ is bigger in Biden’s cloud, whilst ‘white’ is bigger in Trump’s cloud, where ‘fraud’ also occurs. Although many of the terms lack the context needed for a full judgement, we already get a sense of the kind of words being used in connection with each candidate.

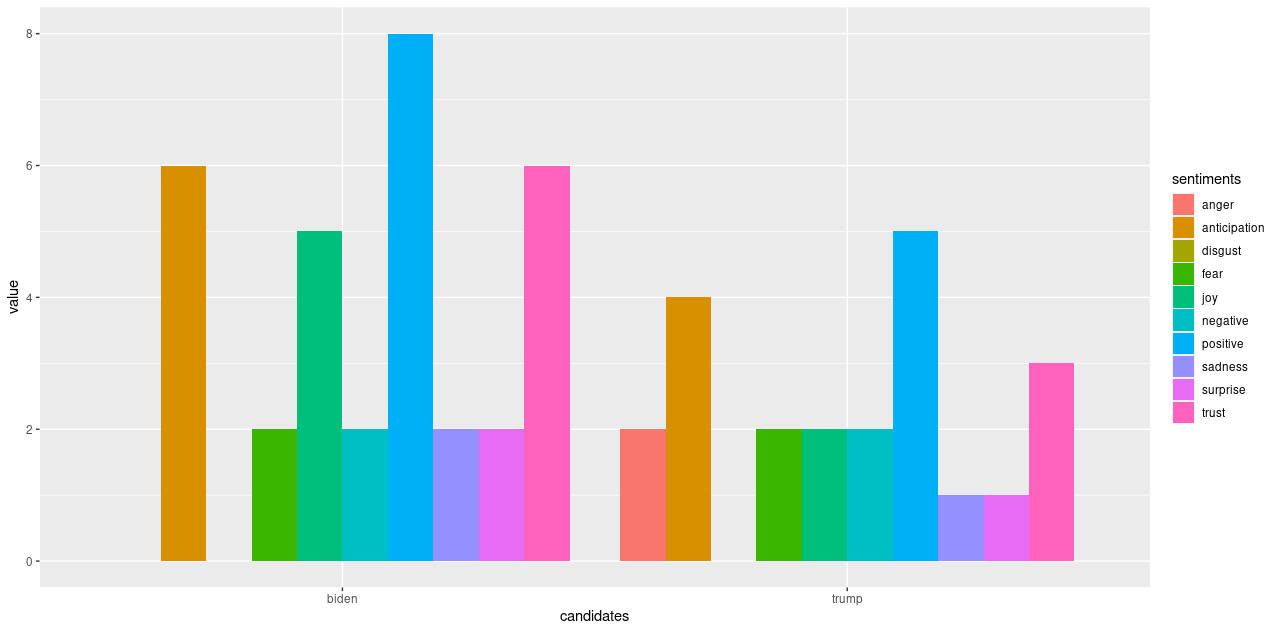

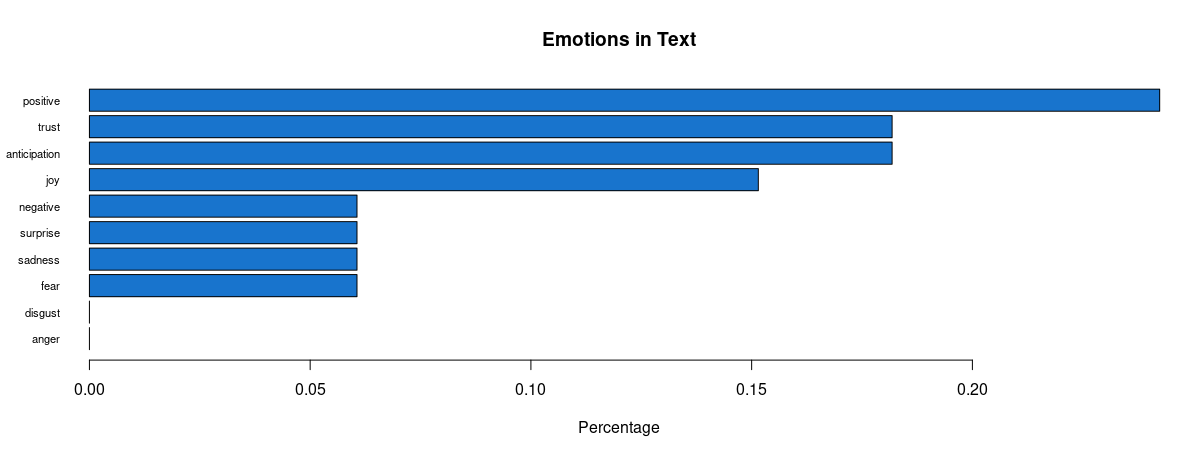

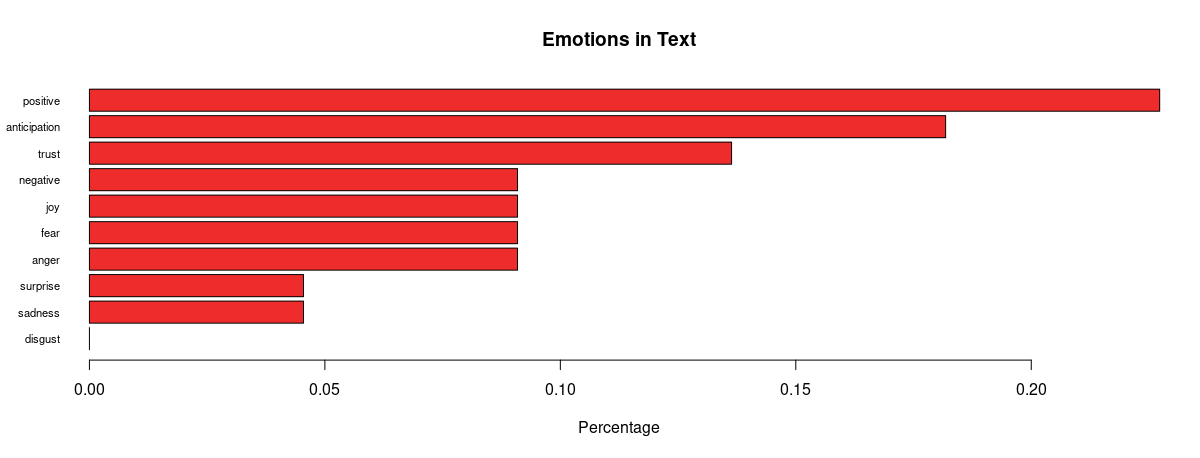

Analysing further, emotion classification was performed to identify the distribution of emotions present in the run-up to the elections. The syuzhet library adopts the NRC Emotion Lexicon – a large, crowd-sourced dictionary of words tallied against eight basic emotions (anger, anticipation, disgust, fear, joy, sadness, surprise and trust) and two sentiments (negative and positive). The terms from the matrix were tallied against the lexicon and the cumulative frequency was calculated for each sentiment. Using ggplot2, a comprehensive bar chart was plotted for both datasets, as shown below.
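The tallying step can be sketched in Python as below. The lexicon here is a three-word toy made up for illustration; its entries are not taken from the real NRC Emotion Lexicon, and the term counts are invented.

```python
from collections import Counter

# Toy lexicon for illustration only; these are NOT the real NRC entries.
TOY_LEXICON = {
    "hope": {"anticipation", "joy", "positive"},
    "fraud": {"anger", "negative"},
    "win": {"joy", "positive"},
}


def emotion_totals(term_counts, lexicon):
    """Add each term's frequency to every category the lexicon assigns to it."""
    totals = Counter()
    for term, count in term_counts.items():
        for category in lexicon.get(term, ()):  # terms not in the lexicon add nothing
            totals[category] += count
    return totals


totals = emotion_totals({"hope": 4, "fraud": 3, "win": 5, "just": 9}, TOY_LEXICON)
```

A word can contribute to several categories at once, and a frequent word with no lexicon entry (‘just’ in this toy input) has no effect on the totals, which is why filler words matter less here than in the wordclouds.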

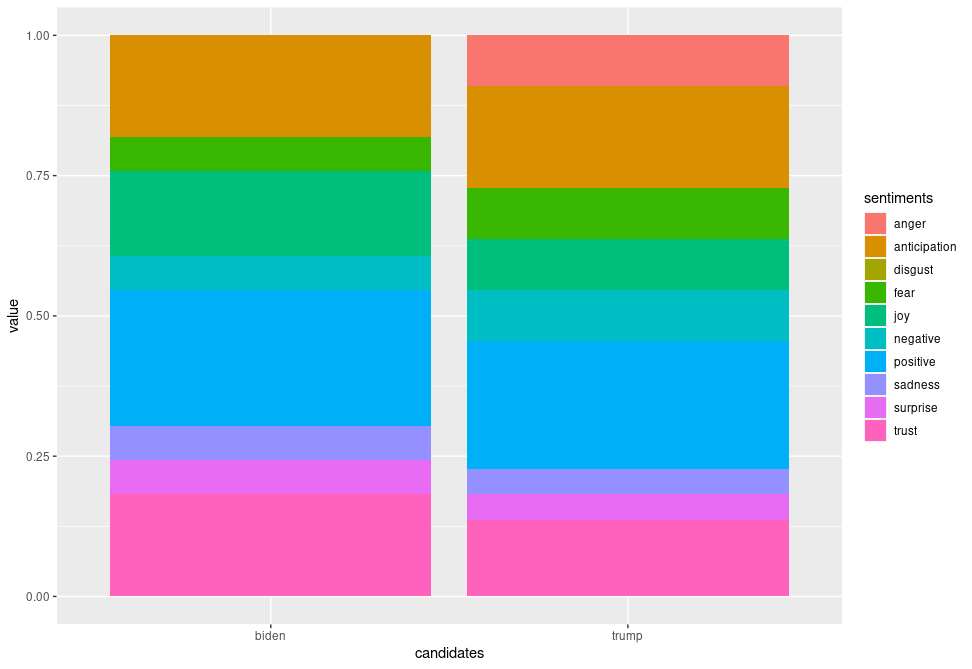

Some revealing insights can be drawn here. Straight away, anger and disgust are absent from Biden’s plot whilst anger is very much present in Trump’s. There is 1.6 times more positivity and 2.5 times more joy pertaining to Biden, as well as twice the trust and 1.5 times more anticipation about his potential. This is strong data in his favour. Feelings of fear and negativity, however, are equal in both; perhaps people feared the other party would win, or what America’s future would hold under either outcome. There was also twice the sadness and surprise pertaining to Biden, which makes me wonder whether citizens were expressing emotions they would feel if Trump won (since the datasets were only split by hashtag), alongside being genuinely sad or surprised that Biden was one of their options.

In the proportional bar charts, there is a wider gap between positivity and negativity for Biden than for Trump, meaning a lower proportion of the sentiment expressed about Biden was negative. On the other hand, there is still around 13% trust in Trump, and a higher proportion of anticipation about him. Only around 4% of the words express sadness and surprise for him, which is around 2 percentage points lower than for Biden – intriguing. We must also remember to factor in the period after the polls opened, when the results were being updated and broadcast, which may have affected people’s feelings too; surprise and sadness may have risen for both Biden and Trump supporters whenever Biden took the lead. There was also a higher proportion fearing Trump’s position, and the anger may have crept in as Trump’s support coloured the bigger states.
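Proportional charts of this kind rest on a simple normalisation: each category's total is divided by the sum of all categories, so that datasets of different sizes can be compared. A minimal Python sketch, with made-up counts that are not the article's results:

```python
def proportions(totals):
    """Convert raw category totals into percentages of the overall total."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {category: 0.0 for category in totals}
    return {category: 100 * value / grand_total for category, value in totals.items()}


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
shares = proportions({"trust": 26, "fear": 14, "joy": 60})
```

A difference between two such shares (say 4% against 6%) is a difference in percentage points, which is a different quantity from the "times more" ratios computed on raw counts.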

Conclusions

Being on the other side of the outcome, it is more captivating to observe the distribution of sentiments across Twitter data collected through the election period. Most patterns we observed in the data point to Joe Biden as the next POTUS, with a few exceptions where a couple of negative emotions were also felt regarding the current president; naturally, not everyone will be fully confident in every aspect of his pitch. Overall, however, we saw clear anger only towards Trump, along with less joy, trust and anticipation. These visualisations, plotted using R’s tm package in a few lines of code, helped us draw compelling insights that supported the actual election outcome. It is indeed impressive how easily text mining can be performed in R (once you have the technical aspects figured out) to produce results quickly.

Nevertheless, there were some limitations. Since the tweets were split according to the hashtags ‘#Biden’ and ‘#Trump’, the same tweet may appear in both datasets. This may mean an overspill of emotions towards Trump in the Biden dataset and vice versa. The analysis would also have been clearer if we had contextualised the terms’ usage; considering phrases instead of single words might build a better picture of what people were feeling. Whilst plotting the wordclouds, each time I filtered out a few foreign stopwords, more crept into the cloud, which calls for a proper translation step before removing stopwords so that all terms are in English. I also noted that despite my trying to remove the “ ’s” token, which was in the top 10, it still made it through to the end, an anomaly in this experiment as every other word in my custom vector was removed.

This experiment can be considered a success for an initial dip into the world of text mining in R, seeing that there is relatively strong correlation between the prediction and the outcome. There are several ways to improve this data analysis which can be aided with further study into various areas of text mining, and then exploring if and how R’s capabilities can expand to help us achieve more in-depth analysis.

My code for this experiment can be found here.

3 Azure Cloud Technologies for Modernizing Legacy Applications

By now, cloud computing has gone from a cutting-edge technology to a business best practice for companies of all sizes and industries. While migrating to the cloud is generally seen as an advantageous move, too many companies aren’t sure what to do once they get there.

In particular, the cloud presents a new opportunity for you to transform and modernize your legacy IT. Below, we’ll discuss three different Microsoft Azure cloud technologies—Microsoft Flow, Microsoft Power Apps, and Azure Functions—and how you can use them to enact digital transformation within your own organization.

Microsoft Flow

Microsoft Flow (since renamed Power Automate) is a tool for automating tasks and processes by connecting separate applications and services. Although Flow is a cloud service that supports many cloud apps and services, it can also reach on-premises data sources through a data gateway, potentially serving as a “bridge” for your legacy IT.

Microsoft Flow makes it simpler for organizations to achieve business process automation (BPA) through built-in connectors between apps and services. Flow includes over 100 pre-built connectors, including Salesforce, Microsoft SQL Server, Outlook, Twitter, Slack, and many more. Flow also includes dozens of pre-built templates, such as saving Gmail attachments to Dropbox or sending weather updates to Microsoft’s Yammer social network.

The potential use cases of Microsoft Flow include:

- Sending a notification such as a text message or email when a certain event occurs (e.g., when a new customer prospect is added to Microsoft Dynamics CRM).

- Collecting data (e.g., monitoring social media for posts that mention a certain keyword, and then performing sentiment analysis to determine how popular opinion changes over time).

- Copying files between different locations (e.g., between SharePoint, Dropbox, and Microsoft OneDrive).

Microsoft Power Apps

Microsoft Power Apps is a low-code solution for building robust, production-ready, mobile-friendly enterprise software applications. Power Apps empowers even non-technical employees to create and launch the software they need to become more productive and effective in their jobs.

Power Apps benefits from a user-friendly, drag-and-drop visual interface, automatically handling the business logic behind the scenes. The Power Apps platform also easily integrates with other apps and services in the Microsoft ecosystem, including OneDrive, SharePoint, Microsoft SQL Server, and third-party services such as Google Docs and Oracle.

Azure Functions

Azure Functions is Microsoft Azure’s serverless computing solution. The term “serverless computing” is a bit of a misnomer: applications still run on servers, but developers don’t have to worry about the tasks of provisioning and maintaining them. Instead, smaller functions are run only in response to a given trigger or event, and the compute resources are released once execution is complete.

“Function as a service” solutions such as Azure Functions have multiple potential benefits:

- Applications are decomposed into smaller, lightweight functions.

- Functions are only executed on-demand, saving resources and cutting costs.

- Developers no longer carry server provisioning and maintenance obligations.
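The trigger-driven model behind these benefits can be illustrated with a small conceptual sketch in Python. This is not the Azure Functions SDK: the in-process `handlers` registry, the `on` decorator and the trigger name are all hypothetical stand-ins for what the platform does on your behalf.

```python
# Hypothetical in-process registry standing in for the serverless platform.
handlers = {}


def on(trigger):
    """Register a small function to run when the named trigger fires."""
    def register(func):
        handlers[trigger] = func
        return func
    return register


@on("new_customer")
def send_welcome(event):
    """One lightweight function, responsible for a single task."""
    return f"Welcome email queued for {event['name']}"


def dispatch(trigger, event):
    """Run the matching function only when its trigger fires; otherwise do nothing."""
    handler = handlers.get(trigger)
    if handler is None:
        return None
    return handler(event)


result = dispatch("new_customer", {"name": "Ada"})
```

Nothing runs until `dispatch` is called, which mirrors the on-demand execution described above: the application is a set of small functions, each tied to an event.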

Conclusion

Microsoft Flow, Microsoft Power Apps, and Azure Functions are three Azure cloud technologies that can help digitally transform your business—and we’ve just scratched the surface of what’s possible with Microsoft Azure. Here at Datavail, we should know: we’re a Microsoft Gold Partner who has already helped hundreds of our clients successfully migrate their applications and database workloads to the Azure cloud.

To learn how we used Flow, Power Apps, and Azure Functions for one client to modernize their CRM software, download our case study “Financial Services Company Modernizes Their CRM with Azure Cloud.” You can also get in touch with our team of Azure experts today for a chat about your own business needs and objectives.

The post 3 Azure Cloud Technologies for Modernizing Legacy Applications appeared first on Datavail.

Which Is Best for Your MariaDB Cloud Migration: Azure or an Azure VM?

MariaDB is a powerful open-source relational database that gains additional capabilities when you migrate it to Azure Database for MariaDB. This Microsoft cloud service is built on the community edition and offers a fully managed experience suitable for many use cases.

The Benefits of Moving to Azure for MariaDB

Azure’s cloud platform offers many benefits, especially if you’re migrating an on-premises MariaDB database.

- Scaling your capacity on an as-needed basis, with changes happening within seconds.

- High availability is built-in to the platform and doesn’t require additional costs to implement.

- Cost-effective pay-as-you-go pricing model.

- Your backup and recovery processes are covered, with support for point-in-time restoration reaching up to 35 days.

- Enterprise-grade security that keeps your data safe whether it’s in transit or at rest.

- The underlying infrastructure is managed, and many processes are automated to reduce administrative load on your end.

- Continue using the MariaDB tools and technology that you’re familiar with while taking advantage of Microsoft Azure’s robust platform.

Migration Options for Azure Database for MariaDB

If you want to move your MariaDB databases to Azure, you have two migration options: Azure Database for MariaDB or MariaDB on an Azure-hosted virtual machine. The right choice for your organization depends on the feature set you’re looking for and how much control you want over the underlying infrastructure.

Azure Database for MariaDB

Azure Database for MariaDB is a Database as a Service (DBaaS) and handles the vast majority of underlying administration and maintenance for your databases. The platform uses MariaDB Community Edition. The pricing model for this service is pay-as-you-go, and you’re able to scale your resources easily based on your current demand.

You have high availability databases right from the start of your service, and you never need to worry about applying patches, restoring databases in the event of an outage, or fixing failed hardware.

Since this migration option is a fully managed service, you have several limitations to contend with. These include:

- You cannot use MyISAM, BLACKHOLE, or ARCHIVE for your storage engine.

- No direct access to the underlying file system.

- Several roles are restricted or not supported, such as DBA, SUPER privilege, and DEFINER.

- Unable to change the MySQL system database.

- Server storage size only scales up, not down.

- You can’t use dynamic scaling to or from Basic pricing tiers.

- Password plugins are not supported.

- Several minimum and maximum database values are dictated by your pricing tier.
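The storage engine restriction in the list above lends itself to a quick pre-migration check. The sketch below is a hedged illustration in Python: it assumes you have already gathered (table name, engine) pairs, for example from the TABLE_NAME and ENGINE columns of information_schema.TABLES, and the function name and sample inventory are hypothetical.

```python
# Engines the list above names as unavailable on the managed service.
UNSUPPORTED_ENGINES = {"MYISAM", "BLACKHOLE", "ARCHIVE"}


def find_blocked_tables(tables):
    """Return names of tables whose storage engine is in the unsupported set.

    tables: iterable of (table_name, engine) pairs; engine may be None (e.g. for views).
    """
    return [
        name
        for name, engine in tables
        if (engine or "").upper() in UNSUPPORTED_ENGINES
    ]


# Hypothetical inventory for illustration.
inventory = [("orders", "InnoDB"), ("audit_log", "ARCHIVE"), ("legacy_users", "MyISAM")]
blocked = find_blocked_tables(inventory)
```

Any table this flags would need converting to a supported engine before moving to the managed service, or it becomes an argument for the VM option.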

MariaDB on Azure VM

MariaDB on Azure VM is an Infrastructure as a Service solution. Azure manages the physical hardware and virtualization layer that host the virtual machine, but you retain control over everything else. One of the biggest benefits of this migration option is that it allows you to use the exact MariaDB version that you want to. You’re also able to make the optimizations and tweaks needed to get the most out of your database engine.

The biggest drawback of MariaDB on Azure VM is that you’re responsible for everything that runs on the VM. Your database administrators take on a significant amount of configuration and maintenance work.

Which MariaDB Migration Option Is Best for Your Organization?

Both MariaDB migration options have their merits. The right option for your organization varies based on your use cases, the technical resources at your disposal, your budget, and the pace of your MariaDB project.

Azure Database for MariaDB

- Azure Database for MariaDB is billed hourly with fixed, predictable rates. It offers a more cost-effective experience than a comparable Azure VM deployment.

- Another area of cost savings comes from not needing to manage the underlying infrastructure. Azure handles the database engine, hardware, operating system, and software needed to run MariaDB. Your database administrators don’t need to worry about patching, configuring, or troubleshooting anything relating to this part of the infrastructure. They can focus on optimizing your databases, tuning queries and indexes, configuring sign-ins, and handling audits.

- Your databases gain built-in high availability that comes with a 99.99% SLA guarantee.

- All of your MariaDB data is replicated automatically as part of the disaster recovery functionality.

- In the event of a server failure, your MariaDB database has a transparent fail-over in place.

- Azure Database for MariaDB scales seamlessly to handle workloads, with managed load balancing.

- Use the data-in replication feature to create a hybrid MariaDB environment. You’re able to sync data from an external MariaDB server to Azure Database for MariaDB. The external server can be a virtual machine, an on-premises server, or even another cloud database service.

- Advanced threat detection helps to identify potential cyberattacks proactively to protect your databases better.

- Customizable monitoring and alerting keep you in the loop on your database’s activity.

- The ideal use cases for Azure Database for MariaDB include projects that require a fast time to market, cost-conscious organizations, organizations with limited technical resources, workloads that need to handle fluctuating demands, and organizations that want an easy-to-deploy managed database.

MariaDB on Azure VM

- Your costs include the provisioned VM, the MariaDB license, and the staff to handle the administrative overhead. However, these expenses are often less than an on-premises deployment.

- You can run any version of MariaDB, which offers more flexibility than the managed database service.

- You can achieve more than 99.99% database availability by configuring more high availability features for your MariaDB database, although this will drive up the cost.

- You have full control over when you update the underlying operating system and database software, which allows you to plan around new features and maintenance windows. Azure VM provides automated functionality that streamlines some of these processes.

- Install additional software to support your MariaDB deployment.

- Define the exact size of the VM, the number of disks, and the storage configuration used for your MariaDB databases.

- Zone redundancy is supported with this migration option.

- The ideal use cases for MariaDB on Azure VM include migrating existing on-premises applications and databases into the cloud without needing to rearchitect any layers.

Preparing for Your MariaDB Azure Migration

Moving your on-premises MariaDB database to one of Azure’s cloud-based options is a complex process. Datavail is here to help with that process. We’re a Microsoft Gold Partner and one of Microsoft’s Preferred Azure Partners for Enterprise and Mid-Market companies. With over 50 Azure certified architects and engineers, we can take you from start to finish and beyond with your migration. Contact us to learn more about our Azure services and MariaDB expertise.

The post Which Is Best for Your MariaDB Cloud Migration: Azure or an Azure VM? appeared first on Datavail.

Microsoft Dynamics CRM: Should You Migrate to the Cloud?

Microsoft Dynamics CRM is Microsoft’s enterprise solution for customer relationship management, helping you keep track of all your contacts and relationships with clients and prospective clients. But as Microsoft increasingly becomes a cloud-first company, should you migrate Dynamics CRM from your on-premises deployment to the Microsoft Azure cloud?

Thinking about migrating Dynamics CRM to Dynamics 365 (D365) in Azure is especially timely, given a few important end of support (EOS) dates:

- Mainstream support for Dynamics CRM 2016 ended in January 2021.

- Extended support for Dynamics CRM 2011 ended in July 2021.

If you don’t want to fall out of compliance, miss out on new features and functionality, or expose yourself to security risks, you need to upgrade your Dynamics CRM software. But should you remain on-premises or move to the Azure cloud? In this article, we’ll discuss the factors to consider, as well as a few tips for your Dynamics CRM cloud migration.

Should You Migrate Dynamics CRM to the Cloud?

For most Dynamics CRM users, migrating to the Azure cloud will be the right choice. Migrating Dynamics CRM on-premises to Microsoft Dynamics 365 Online comes with several benefits, including:

- Higher availability: Microsoft guarantees 99.9 percent uptime for Dynamics 365 Online—that’s a total of less than 9 hours of downtime for every year you use the software.

- Greater flexibility: Cloud solutions such as Dynamics 365 Online are accessible from anywhere, at any time, improving users’ flexibility and productivity.

- Cloud integrations: In the Azure cloud, it’s easier to integrate your Dynamics CRM data with your enterprise resource planning (ERP) solution, as well as other apps and services in the Microsoft ecosystem such as Office 365 and Power BI.

- Fewer maintenance obligations: Cloud vendors are responsible for application support and maintenance, so your IT team doesn’t have to worry about unexpected problems and crashes.
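The downtime figure quoted for the 99.9 percent uptime guarantee follows from simple arithmetic, shown here as a short Python sketch. It assumes a 365-day year; the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def max_downtime_hours(uptime_percent):
    """Hours per year a service may be down while still meeting the uptime figure."""
    return HOURS_PER_YEAR * (100 - uptime_percent) / 100


print(round(max_downtime_hours(99.9), 2))  # 8.76
```

So 99.9 percent uptime allows roughly 8.76 hours of downtime per year, which is where "less than 9 hours" comes from.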

Of course, your own business goals and requirements may be different—so be sure to speak with a trusted Azure migration partner who can help you decide on the best path forward.

Tips for a Dynamics 365 Azure Cloud Migration

- Understand the new features and functionality that will be available with your move to the cloud. This is especially important for organizations who are still on an older on-premises version of Dynamics CRM, and thus haven’t used this new functionality before. Consider how you might leverage features such as automation to improve employees’ productivity and efficiency.

- Take the time to review your on-premises enterprise data before proceeding with the cloud migration. Is there unused or out-of-date information that needs to be pruned? How will the on-premises CRM database map to the Dynamics 365 database in the cloud?

- Additionally, consider combining your Dynamics CRM cloud migration with another Azure cloud migration, such as Office 365.

Conclusion

Moving your Dynamics CRM deployment to the cloud is no easy feat—which is why you’ll likely need a Dynamics CRM cloud migration partner. As a Microsoft Gold Partner, Datavail has helped hundreds of our clients successfully migrate their applications and data to the Azure cloud.

To learn how we helped one client move their on-premises Dynamics CRM deployment to the cloud, check out Datavail’s case study “Financial Services Company Modernizes Their CRM with Azure Cloud.” You can also get in touch with our team of Azure experts today for a chat about your own business needs and objectives.

The post Microsoft Dynamics CRM: Should You Migrate to the Cloud? appeared first on Datavail.

The Choice Between Virtualization and Containerization

Virtualization and containerization both offer ways for software developers to isolate environments from the physical infrastructure, but they use different approaches to achieve this goal. Choosing between virtualization and containerization depends on knowing the strengths and weaknesses of both, and the most applicable use cases.

What are Virtual Machines and Containers?

Let’s start with the basics of each technology.

Virtual Machines

A virtual machine (VM) provides an environment that acts as a full physical computer system. It does this through hypervisor software, which virtualizes the underlying hardware for use by the VMs. Each VM has its own operating system, kernel, and libraries, which you can use for software development and other purposes.

You may only have one physical server, but you can run multiple VMs that emulate a full PC environment. The VMs are isolated from one another and the underlying physical server operating system.

Containers

Containers virtualize the operating system, rather than the underlying hardware. They are isolated environments, but they share the host operating system and other resources, such as libraries. To implement containers, you need containerization technology software.

Container Pros and Cons

Pros

- Portability: Containers share the host operating system and associated resources, so their overall size is small. A container typically has the application and any required dependencies. Moving containers is a simple, resource-light task.

- Optimized resource allocation: You only need a single copy of the operating system for all of your containers, so you use fewer hardware resources compared to a VM. When you’re working with a tight software development budget, being able to pare down the overall resources required for the project can go a long way.

- Easy to update individual software components: If you need to make changes to a specific container, it’s easy to do so. You’re able to redeploy your containers without involving the rest of the application. Patching security holes and adding new functionality is a simple process with this technology.

- Seamless horizontal scaling: Many container orchestration platforms offer scaling for your containers, so you can add the right number of pods for your application. You only scale the containers that require more resources, so it’s a cost-efficient way of handling different software components.

Cons

- Less isolated: Containers share vital resources such as the operating system kernels and libraries, so they have less isolation between the environments. If you’re trying to maximize your security measures, the lack of full isolation may not be ideal for your development requirements.

- You’re limited to one operating system: You’re not able to spin up different operating systems for containerization. The host operating system is the only one available for containers, and any changes to it impact the full environment.

Virtual Machine Pros and Cons

Pros

- Full isolation for better security: Fully isolated environments stop VMs from impacting one another. A problem with one of the operating systems will not spill over into the other environments.

- Supports multiple operating systems: You can set up as many operating systems as you’d like on your system. You can develop software for multiple operating systems without needing additional hardware, which also makes testing your applications easier.

- Potential for greater overall capacity: VMs typically have more resources than comparable containerization environments, as VMs need everything from the operating system to virtualized hardware for each instance. If you’re not using the maximum capacity of your VM hardware, you can leverage the overage for higher-resource processes.

Cons

- Less portability: The fully isolated VM environment comes at the cost of portability. You may be working with VMs that are packaged into multiple gigabytes, depending on the resources they have available. You’re not able to move a VM quickly to a new destination, and shifting it is a more complicated process than moving a container.

- Lack of resource optimization: Your VM environments have many of the same resources, duplicated for each instance. This lack of optimization can increase your costs, and you have to predict the overall capacity for all of your environments when configuring the VMs.

- More OS maintenance requirements: The more operating systems you have, the more updates you need to stay on top of. This con is minimal if you have a limited number of environments, but it can quickly add up.

Matching Virtualization and Containerization to the Ideal Use Cases

Get the most out of both technologies by learning more about the use cases at which they excel. They’re valuable tools for every software development team, and using them effectively can help you meet your application goals.

Containers work best for applications that are cloud-native, have multi-cloud deployments, need horizontal scalability, have frequent updates, and have less stringent security requirements.

Virtualization works best when you have high-security requirements, use more than one operating system, have resource-intensive operations, and may or may not be deploying the application to a single cloud.

Using Containers and VMs Together

Containerization and virtualization are not an either/or prospect. You can use both together to great effect. In this configuration, the container’s host operating system is powered by a virtual machine. You’re able to create a dedicated, isolated environment for your containers while sharing the hardware resources with other VM environments.
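The use-case guidance above can be condensed into a rough rule of thumb. The Python sketch below simply encodes the criteria listed in this article; the function name, the flag names and the equal weighting of criteria are assumptions made for illustration, not an established decision method.

```python
def suggest_environment(high_security=False, multiple_os=False,
                        resource_intensive=False, horizontal_scaling=False,
                        frequent_updates=False, cloud_native=False):
    """Map the article's use-case criteria to a suggested approach."""
    # Criteria the article associates with virtual machines
    vm_signals = sum([high_security, multiple_os, resource_intensive])
    # Criteria the article associates with containers
    container_signals = sum([horizontal_scaling, frequent_updates, cloud_native])
    if vm_signals and container_signals:
        return "containers on virtual machines"
    if vm_signals:
        return "virtual machines"
    if container_signals:
        return "containers"
    return "no clear preference"


choice = suggest_environment(high_security=True, horizontal_scaling=True)
```

When requirements pull in both directions, as in the example call, the combined setup described above (containers whose host operating system runs on a VM) is the suggested outcome.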

If you’d like to learn more about choosing containers, VMs, or both, contact us at Datavail. We have the technical insight you need to make the best decision for your business goals.

The post The Choice Between Virtualization and Containerization appeared first on Datavail.