Sql2Odi now supports the WITH statement

The Rittman Mead's Sql2Odi tool that converts SQL SELECT statements to ODI Mappings, now supports the SQL WITH statement as well. (For an overview of our tool's capabilities, please refer to our blog posts here and here.)

The Case for WITH Statements

If a SELECT statement is complex, in particular if it queries data from multiple source tables and relies on subqueries to do so, there is a good chance that rewriting it as a WITH statement will make it easier to read and understand. Let me show you what I mean...

SELECT

LAST_NAME,

FIRST_NAME,

LAST_NAME || ' ' || FIRST_NAME AS FULL_NAME,

AGE,

COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,

LARGE_CITY.POPULATION

FROM

ODI_DEMO.SRC_CUSTOMER CST

INNER JOIN ODI_DEMO.SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID

LEFT JOIN (

SELECT

CITY_ID,

UPPER(CITY) CITY,

POPULATION

FROM ODI_DEMO.SRC_CITY

WHERE POPULATION > 750000

) LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID

WHERE AGE BETWEEN 25 AND 45This is an example from my original blog posts. Whilst one could argue that the query is not that complex, it does contain a subquery, which means that the query does not read nicely from top to bottom - you will likely need to look at the subquery first for the master query to make sense to you.

Same query, rewritten as a WITH statement, looks like this:

WITH

BASE AS (

SELECT

LAST_NAME,

FIRST_NAME,

LAST_NAME || ' ' || FIRST_NAME AS FULL_NAME,

AGE,

CITY_ID

FROM

ODI_DEMO.SRC_CUSTOMER CST

),

LARGE_CITY AS (

SELECT

CITY_ID,

UPPER(CITY) CITY,

POPULATION

FROM ODI_DEMO.SRC_CITY

WHERE POPULATION > 750000

),

ALL_DATA AS (

SELECT

LAST_NAME,

FIRST_NAME,

FULL_NAME,

AGE,

COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,

LARGE_CITY.POPULATION

FROM

BASE CST

INNER JOIN ODI_DEMO.SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID

LEFT JOIN LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID

WHERE AGE BETWEEN 25 AND 45

)

SELECT * FROM ALL_DATAWhilst it is longer, it reads nicely from top to bottom. And the more complex the query, the more the comprehensibility will matter.

The first version of our Sql2Odi tool did not support WITH statements. But it does now.

Convert a WITH Statement to an ODI Mapping

The process is same old - first we add the two statements to our metadata table, add some additional data to it, like the ODI Project and Folder names, the name of the Mapping, the Target table that we want to populate and how to map the query result to the Target table, names of Knowledge Modules and their config, etc.

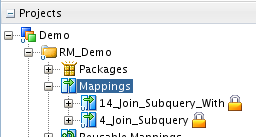

After running the Sql2Odi Parser, which now happily accepts WITH statements, and the Sql2Odi ODI Content Generator, we end up with two mappings:

What do we see when we open the mappings?

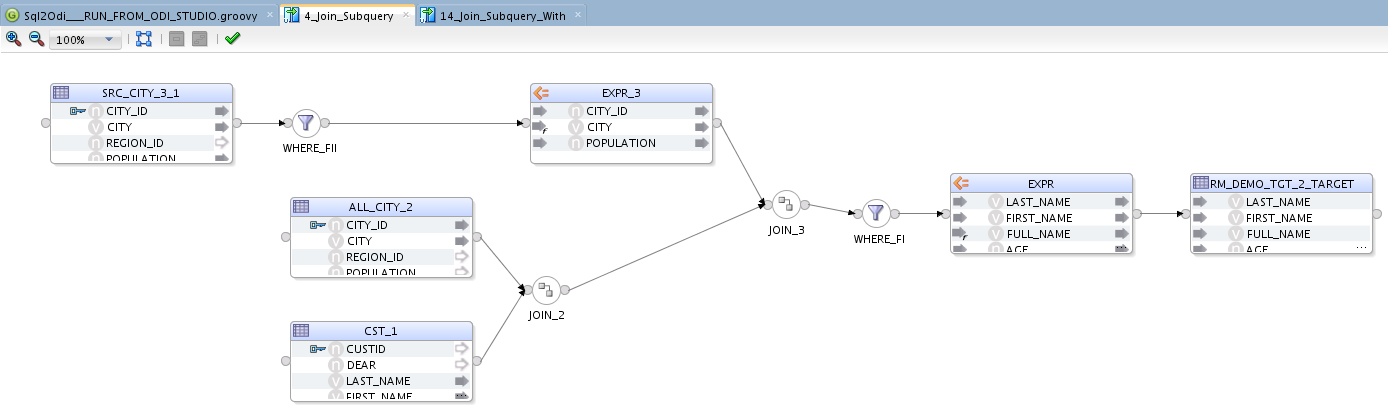

The original SELECT statement based mappings is generated like this:

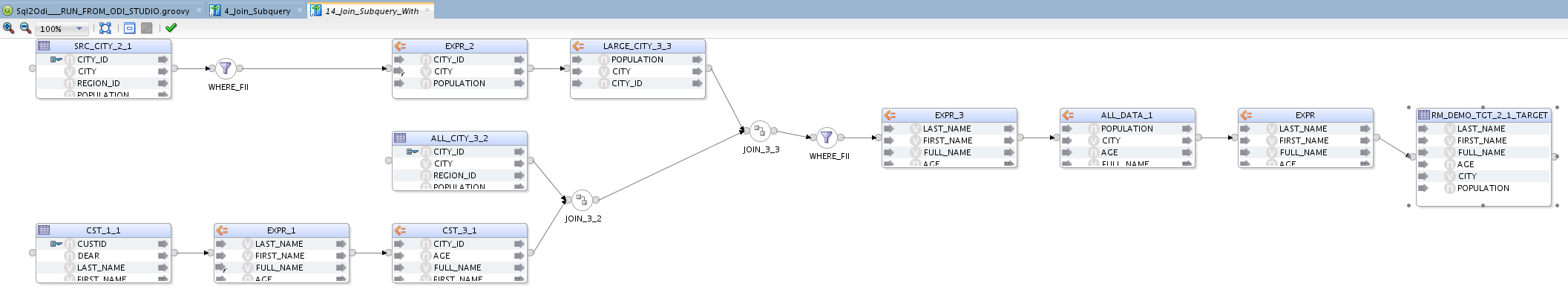

The new WITH statement mapping, though it queries the same data in pretty much the same way, is more verbose:

The additional EXPRESSION components are added to represent references to the WITH subqueries. While the mapping is now busier than the original SELECT, there should be no noticeable performance penalty. Both mappings generate the exact same output.

The Hybrid Cloud for Databases: Best of Both Worlds?

Cloud computing has long since gone from a cutting-edge technology to a well-established best practice for organizations of all sizes and industries.

According to Flexera’s 2020 State of the Cloud Report, 98 percent of businesses now use at least one public or private cloud. In particular, migrating your databases to the cloud can make them more scalable, more available, and easier to integrate with the rest of your cloud infrastructure.

But what happens when your enterprise data can’t be moved to the cloud, for reasons such as data security or compliance?

Whether you need to keep your data on-premises due to external regulations or organizational preference, the good news is that you can still continue to enjoy the benefits of the cloud. The solution: a hybrid cloud/on-premises system that combines the best of both worlds.

Use Cases for a Hybrid Cloud Database

What does a database in the hybrid cloud look like? There are as many answers to this question as there are uses of hybrid cloud designs.

For example, some organizations may choose a hybrid cloud data storage solution for purposes of disaster recovery and business continuity, preventing a single point of failure. Businesses using this configuration keep file backups in two places (in the cloud and on-premises). After a data loss event, on-premises backups can be restored more quickly than cloud backups, which first need to be downloaded over the internet. On the other hand, cloud backups remain securely on a remote server to protect data in the event of a natural disaster that destroys on-premises equipment.

Another reason to use a hybrid cloud database: many organizations and industries are subject to laws regarding data sovereignty, which requires information to stay within the borders of the country, region, or territory in which it was collected or processed. Because public cloud servers may be distributed in many different geographic locations, data sovereignty requirements essentially put a damper on your ability to move this information to the cloud.

In still other cases, organizations aren’t technically subject to cloud data regulations, but still would rather keep their enterprise data on-premises. Often these preferences are based on outdated concerns about the risk of a data breach. With cloud adoption growing, however, these worries have largely fallen by the wayside. According to a 2019 survey, 61 percent of IT security executives believe that the risk of a data breach is the same or lower in the cloud as it is on-premises.

Regardless of why you choose a hybrid cloud database, maintaining your data on-premises still allows you to enjoy a virtually seamless experience in the cloud. However, setting up this seamless hybrid cloud experience is easier said than done, so it’s best to join forces with an experienced cloud managed services partner.

Wondering if a hybrid cloud database is right for you? You’re not alone. By speaking with a skilled, knowledgeable cloud migration partner like Datavail, you can decide on the right path forward to meet your business needs and objectives.

Case Study: Major Utility Company

One of Datavail’s clients, a major Canadian utility company, was faced with this question when moving its website from on-premises hosting to the Microsoft Azure cloud. The client knew that a cloud migration would tremendously improve the site’s availability, scalability, and elasticity—which are especially important concerns during times of peak usage, such as storms and widespread power outages.

However, the client also faced regulatory compliance issues about data sovereignty, which meant that its core customer data needed to be stored on-premises, not in the cloud. Due to this requirement, the client had originally envisioned the project as an on-premises upgrade rather than a cloud migration.

Datavail worked with the client to design and implement a hybrid cloud solution that uplifted the website infrastructure to the cloud, while leaving customer data on-premises, housed in their SAP database, to meet the client’s regulatory requirements. This hybrid cloud design leverages the best aspects of cloud and premises and can be applied in any situation where data sovereignty, data security, and regulatory compliance are important concerns—not only for utility companies, but also in other industries such as healthcare and finance.

Looking for a hybrid cloud solution for your next project? Find out how we can help by reading Datavail’s case study “Major Utility Company Improves Residential Customer Website Experience with Azure.”

The post The Hybrid Cloud for Databases: Best of Both Worlds? appeared first on Datavail.

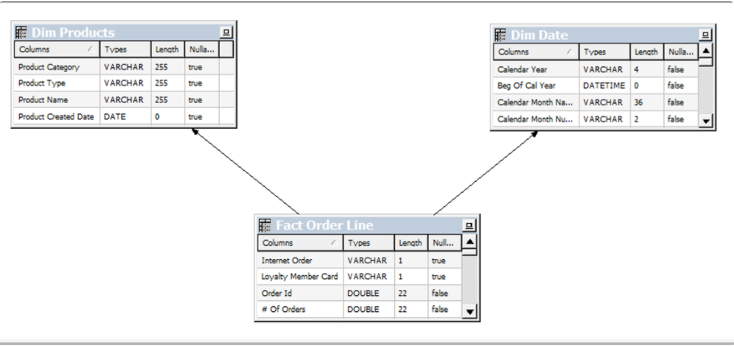

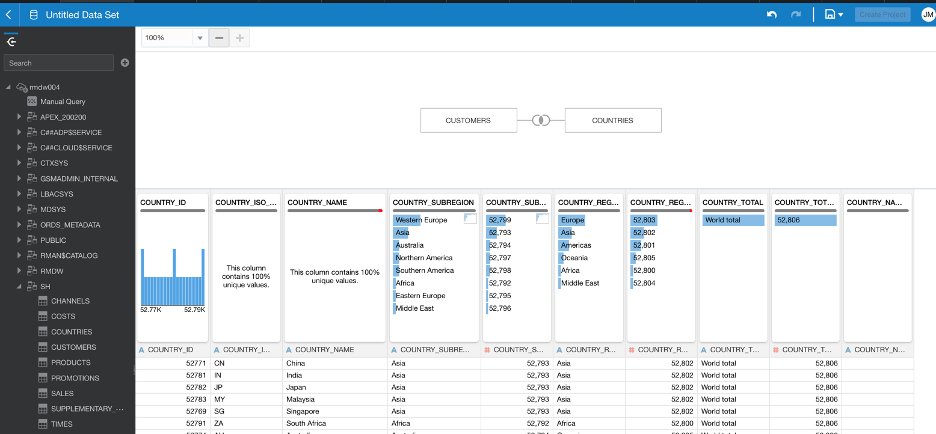

Joining Data in OAC

One of the new features in OAC 6.0 was Multi Table Datasets, which provides another way to join tables to create a Data Set.

We can already define joins in the RPD, use joins in OAC’s Data Flows and join Data Sets using blending in DV Projects, so I went on a little quest to compare the pros and cons of each of the methods to see if I can conclude which one works best.

What is a data join?

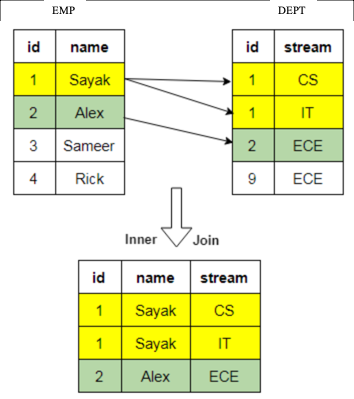

Data in databases is generally spread across multiple tables and it is difficult to understand what the data means without putting it together. Using data joins we can stitch the data together, making it easier to find relationships and extract the information we need. To join two tables, at least one column in each table must be the same. There are four available types of joins I’ll evaluate:

1. Inner join - returns records that have matching values in both tables. All the other records are excluded.

2. Left (outer) join - returns all records from the left table with the matched records from the right table.

3. Right (outer) join - returns all records from the right table with the matched records from the left table.

4. Full (outer) join - returns all records when there is a match in either left or right tables.

Each of the three approaches give the developer different ways and places to define the relationship (join) between the tables. Underpinning all of the approaches is SQL. Ultimately, OAC will generate a SQL query that will retrieve data from the database, so to understand joins, let’s start by looking at SQL Joins

SQL Joins

In an SQL query, a JOIN clause is used to execute this function. Here is an example:

SELECT EMP.id, EMP.name, DEPT.stream

FROM EMP

INNER JOIN DEPT ON DEPT.id = EMP.id;

Now that we understand the basic concepts, let’s look at the options available in OAC.

Option 1: RPD Joins

The RPD is where the BI Server stores its metadata. Defining joins in the RPD is done in the Admin Client Tool and is the most rigorous of the join options. Joins are defined during the development of the RPD and, as such, are subject to the software development lifecycle and are typically thoroughly tested.

End users access the data through Subject Areas, either using classic Answers and Dashboards, or DV. This means the join is automatically applied as fields are selected, giving you more control over your data and, since the RPD is not visible to end-users, avoiding them making any incorrect joins.

The main downside of defining joins in the RPD is that it’s a slower process - if your boss expects you to draw up some analyses by the end of the day, you may not make the deadline using the RPD. It takes time to organise data, make changes, then test and release the RPD.

Join Details

The Admin Client Tool allows you to define logical and physical tables, aggregate table navigation, and physical-to-logical mappings. In the physical layer you define primary and foreign keys using either the properties editor or the Physical Diagram window. Once the columns have been mapped to the logical tables, logical keys and joins need to be defined. Logical keys are generally automatically created when mapping physical key columns. Logical joins do not specify join columns, these are derived from the physical mappings.

You can change the properties of the logical join; in the Business Model Diagram you can set a driving table (which optimises how the BI Server process joins when one table is smaller than the other), the cardinality (which expresses how rows in one table are related to rows in the table to which it is joined), and the type of join.

Driving tables only activate query optimisation within the BI Server when one of the tables is much smaller than the other. When you specify a driving table, the BI Server only uses it if the query plan determines that its use will optimise query processing. In general, driving tables can be used with inner joins, and for outer joins when the driving table is the left table for a left outer join, or the right table for a right outer join. Driving tables are not used for full outer joins.

The Physical Diagram join also gives you an expression editor to manually write SQL for the join you want to perform on desired columns, introducing complexity and flexibility to customise the nature of the join. You can define complex joins, i.e. those over non-foreign key and primary key columns, using the expression editor rather than key column relationships. Complex joins are not as efficient, however, because they don’t use key column relationships.

It’s worth addressing a separate type of table available for creation in the RPD – lookup tables. Lookup tables can be added to reference both physical and logical tables, and there are several use cases for them e.g., pushing currency conversions to separate calculations. The RPD also allows you to define a logical table as being a lookup table in the common use case of making ID to description mappings.

Lookup tables can be sparse and/or dense in nature. A dense lookup tables contains translations in all languages for every record in the base table. A sparse lookup table contains translations for only some records in the base tables. They can be accessed via a logical calculation using DENSE or SPARSE lookup function calls. Lookup tables are handy as they allow you to model the lookup data within the business model; they’re typically used for lookups held in different databases to the main data set.

Multi-database joins allow you to join tables from across different databases. Even though the BI Server can optimise the performance of multi-database joins, these joins are significantly slower than those within a single database.

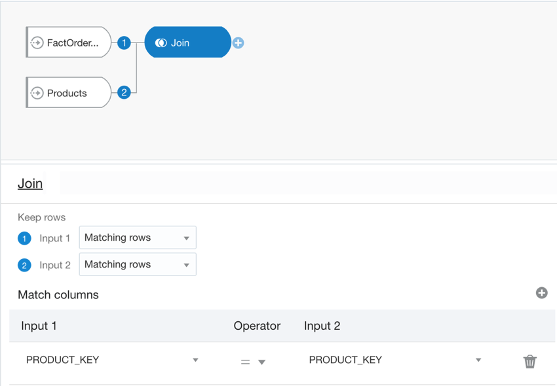

Option 2: Data Flow Joins

Data Flows provide a way to transform data in DV. The data flow editor gives us a canvas where we can add steps to transform columns, merge, join or filter data, plot forecasts or apply various models on our datasets.

When it comes to joining datasets, you start by adding two or more datasets. If they have one or more matching columns DV automatically detects this and joins them; otherwise, you have to manually add a ‘Join’ step and provided the columns’ data types match, a join is created.

A join in a data flow is only possible between two datasets, so if you wanted to join a third dataset you have to create a join between the output of the first and second tables and the third, and so on. You can give your join a name and description which would help keep track if there are more than two datasets involved. You can then view and edit the join properties via these nodes created against each dataset. DV gives you the standard four types of joins (Fig. 1), but they are worded differently; you can set four possible combinations for each input node by toggling between ‘All rows’ and ‘Matching rows’. That means:

Join type | Node 1 | Node 2 |

Inner join | Matching rows | Matching rows |

Left join | All rows | Matching rows |

Right join | Matching rows | All rows |

Full join | All rows | All rows |

The table above explains which type of join can be achieved by toggling between the two drop-down options for each dataset in a data flow join.

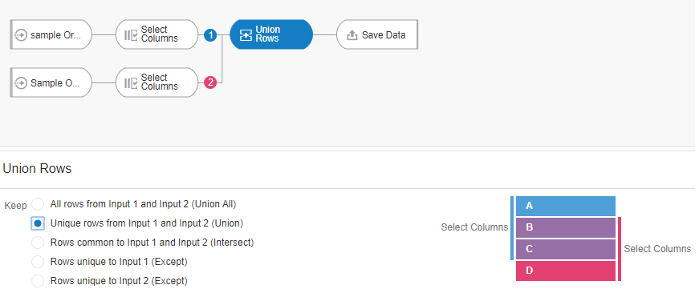

It’s worth mentioning there is also an operator called ‘Union Rows’. You can concatenate two datasets, provided they have the same number of columns with compatible datatypes. There are a number of options to decide how you want to combine the rows of the datasets.

One advantage of data flows is they allow you to materialise the data i.e. save it to disk or a database table. If your join query takes 30 minutes to run, you can schedule it to run overnight and then reports can query the resultant dataset.

However, there are limited options as to the complexity of joins you can create:

- the absence of an expression editor to define complex joins

- you cannot join more than two datasets at a time.

You can schedule data flows which would allow you to materialise the data overnight ready for when users want to query the data at the start of the day.

Data Flows can be developed and executed on the fly, unlike the longer development lifecycle of the RPD.

It should be noted that Data Flows cannot be shared. The only way around this is to export the Data Flow and have the other user import and execute it. The other user will need to be able to access the underlying Data Sets.

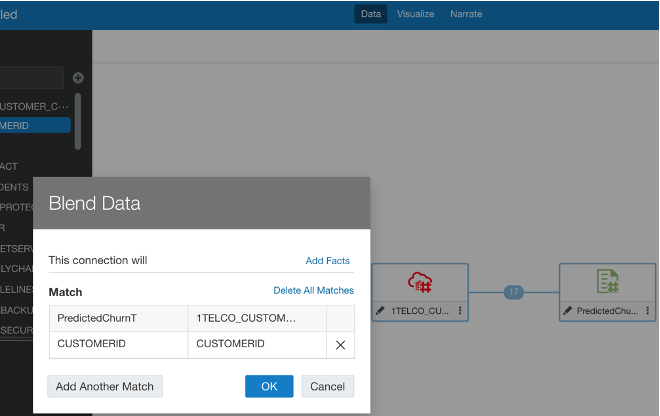

Option 3: Data Blending

Before looking at the new OAC feature, there is a method already present for cross-database joins which is blending data.

Given at least two data sources, for example, a database connection and an excel spreadsheet from your computer, you can create a Project with one dataset and add the other Data Set under the Visualise tab. The system tries to find matches for the data that’s added based on common column names and compatible data types. Upon navigating back to the Data tab, you can also manually blend the datasets by selecting matching columns from each dataset. However, there is no ability to edit any other join properties.

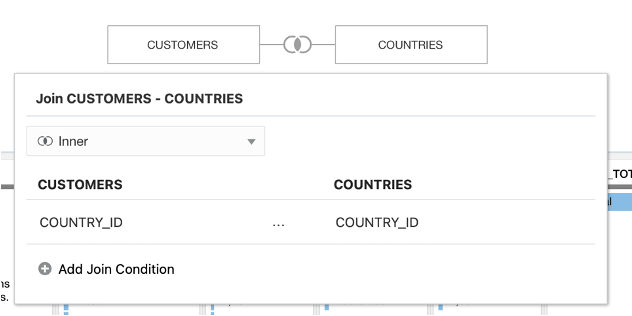

Option 4: Multi Table Datasets

Lastly, let’s look at the newly added feature of OAC 6.0: Multi Table Datasets. Oracle have now made it possible to join several tables to create a new Data Set in DV.

Historically you could create Data Sets from a database connection or upload files from your computer. You can now create a new Data Set and add multiple tables from the same database connection. Oracle has published a list of compatible data sources.

Once you add your tables DV will automatically populate joins, if possible, on common column names and compatible data types.

The process works similarly to how joins are defined in Data Flows; a pop-up window displays editable properties of the join with the same complexity - the options to change type, columns to match, the operator type relating them, and add join conditions.

The data preview refreshes upon changes made in the joins, making it easy to see the impact of joins as they are made.

Unlike in the RPD, you do not have to create aliases of tables in order to use them for multiple purposes; you can directly import tables from a database connection, create joins and save this Multi Table Dataset separately to then use it further in a project, for example. So, the original tables you imported will retain their original properties.

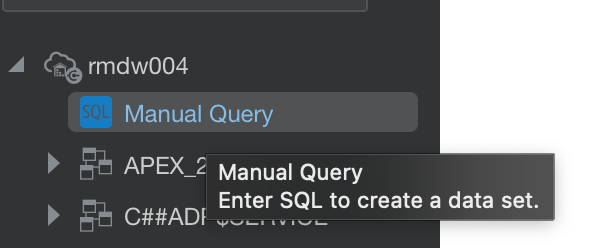

If you need to write complex queries you can use the Manual SQL Query editor to create a Data Set, but you can only use the ‘JOIN’ clause.

So, what’s the verdict?

Well, after experimenting with each type of joining method and talking to colleagues with experience, the verdict is: it depends on the use case.

There really is no right or wrong method of joining datasets and each approach has its pros and cons, but I think what matters is evaluating which one would be more advantageous for the particular use case at hand.

Using the RPD is a safer and more robust option, you have control over the data from start to end, so you can reduce the chance of incorrect joins. However, it is considerably slower and make not be feasible if users demand quick results. In this case, using one of the options in DV may seem more beneficial.

You could either use Data Flows, either scheduled or run manually, or Multi Table Datasets. Both approaches have less scope for making complex joins than the RPD. You can only join two Data Sets at a time in the traditional data flow, and you need a workaround in DV to join data across database connections and computer-uploaded files; so if time and efficiency is of essence, these can be a disadvantage.

l would say it’s about striking a balance between turnaround time and quality - of course both good data analysis in good time is desirable, but when it comes to joining datasets in these platforms it will be worth evaluating how the use case will benefit from either end of the spectrum.

4 Methods for Migrating Oracle Databases to Microsoft Azure

When you migrate an Oracle database to Microsoft Azure’s cloud services, you need to choose the most suitable method for the task. The right option for your database migration project varies based on your available technical resources, the complexity of your infrastructure, and your intended use cases.

Cross-Cloud Connectivity

You have a relatively easy migration ahead of you if you already use Oracle Cloud Infrastructure. Microsoft Azure provides cross-cloud connectivity, so your Oracle databases connect directly to the Azure service. This method is not a true migration, as the data remains on Oracle Cloud Infrastructure. If you’re trying to move away from Oracle’s cloud services or don’t want a multi-cloud deployment, then this would not be an ideal choice.

Lift and Shift

A lift and shift migration uses an approach that minimizes downtime potential for your organization. While Microsoft Azure lacks a dedicated Oracle database service in its cloud, you can configure Azure VM to accommodate your databases. You use Azure’s built-in migration tools to migrate your on-premises Oracle databases to the VM seamlessly.

You avoid a significant amount of downtime by configuring the Azure VM and shifting the data over to it before the cutover occurs. While you do have to temporarily pay for running two sets of databases, this approach gives you sufficient time to go through testing and work out any issues.

Database Refactoring

Legacy on-premises Oracle databases may have configurations that are poorly suited to cloud features. While you could lift and shift them as-is to Azure VM, you would end up with a wide range of issues that could result in data loss and unplanned downtime.

In this Oracle migration scenario, refactoring the code before you go through the migration may be the best option. You do have to commit significant time and resources to this project, but you end up with a modernized Oracle database that can take full advantage of the scale, performance, and agility offered by a cloud-based environment.

Database Rearchitecting

Sometimes your Oracle database technology may not be serving your current needs and use cases. Instead of migrating the databases, you can completely rearchitect your database environment. Since database needs can change significantly over the course of an organization’s lifespan, evaluating whether Oracle is the right option to take you into the cloud is important.

Learn more about what your organization needs to do before the migration with our white paper, “Getting Your Organization Ready for Your Oracle Database to Microsoft Azure Migration.”

The post 4 Methods for Migrating Oracle Databases to Microsoft Azure appeared first on Datavail.

Why Companies Are Moving Their Analytics to Azure Cloud

Whether you’re a tiny startup or a massive Fortune 500 firm, cloud analytics has become a business best practice. A 2018 survey by MicroStrategy found that 39 percent of organizations are now running their analytics in the cloud, while another 45 percent are using analytics both in the cloud and on-premises.

As a Microsoft Gold Partner, Datavail has the skills and experience that companies need to make their next Azure cloud analytics migration a success. Below, we’ll discuss both the benefits of Azure cloud analytics, as well as some tips and tricks for companies who are considering a move to the Azure cloud.

The Benefits of Analytics on Azure Cloud

Azure Cloud is the perfect site for many organizations to run their business intelligence and analytics workloads. The advantages of running analytics on Azure Cloud include:

- Lower IT costs:Cloud migrations represent a shift from the capital expenses (CAPEX) to the operating expenses (OPEX) pricing model—that means manageable monthly fees rather than pricey purchases of on-premises hardware and software licenses.

- Increased scalability and flexibility:Scalability is an essential cloud feature to handle the ever-growing amounts of enterprise data at your fingertips. Azure Autoscale helps you dynamically scale your applications to respond to changes in usages and demand.

- Data backup and business continuity:Tools like Azure Backup are essential to protect the integrity and continuity of your business after data loss or disaster.

Tips and Tricks for Moving Analytics to Azure Cloud

While the advantages of analytics on the Azure Cloud are obvious, the roadmap to getting there is less clear. According to a 2017 report, 62 percent of companies with cloud migration projects said that it was “harder than expected,” while 55 percent went over their allotted budget.

Below, we’ll go over some tips, tricks, and best practices for Azure cloud analytics migrations to successfully execute your next project.

- Don’t be afraid to experiment

Moving to cloud analytics can represent a new opportunity for your organization to realign and shake things up. One of Datavail’s clients, a Bay Area restaurant chain, was originally using the Infor Birst BI and analytics software. However, the client found that Infor Birst suffered from problems such as a high learning curve and lagging user adoption.

The client needed a better analytics solution that would provide real-time data and insights across different locations, including a single source of truth. By joining forces with Datavail, the client decided to migrate to Microsoft’s Power BI cloud analytics tool. This switch to the Azure ecosystem—including Power BI, SQL Server, Excel, and Visual Studio—gave the client a common toolset to unify employees across the organization.

- Look for “quick wins”

Another of Datavail’s clients, a retail merchandising partner, had been using an on-premises SQL Server OLTP database that had some serious flaws, from an outdated architecture and performance issues to security vulnerabilities and the lack of a centralized repository for reporting. Datavail suggested that migrating to the Azure cloud would be an obvious next step.

During and after the migration, the client was able to realize substantial improvements thanks to a few easy enhancements, such as:

- Partitioning data to improve scalability and performance.

- Making changes to system design to eliminate deadlocks.

- Evaluating ways to reduce the cost of software licenses.

In addition, Datavail helped the client use services such as Azure Data Factory and Azure Data Lake Storage to plan and prepare for its Power BI cloud migration.

Looking for more tips and tricks? Check out Microsoft’s Azure cloud migration checklist.

How Datavail Can Help

Datavail is a Microsoft Gold Partner, with years of experience helping businesses migrate to the Azure cloud. We have the knowledge and skillset to make your next Azure cloud migration a success. Our suite of cloud migration services includes:

- Selecting the right cloud technologies for your business.

- Completing a cloud readiness assessment of your organization.

- Developing a cloud migration roadmap with an appropriate timeline.

- Creating a total cost of ownership (TCO) estimate and deploying a proof of concept.

- Uplifting analytics solutions from on-premises to the cloud.

- Providing ongoing cloud support, maintenance, and management.

Conclusion

Want to see the benefits of moving your analytics to Azure Cloud for yourself? Get in touch with Datavail’s team of cloud migration experts today for a chat about your business needs and objectives—or download our white paper “Across the Continent with Cloud Analytics” to see how 8 of our clients have leveraged cloud analytics to their advantage.

The post Why Companies Are Moving Their Analytics to Azure Cloud appeared first on Datavail.