Category Archives: Rittman Mead

Rittman Mead at Oracle OpenWorld 2016

The big show is upon us and once again Rittman Mead will be actively participating at Oracle OpenWorld 2016. Oracle's premier conference runs September 18–22 at the Moscone Center in beautiful San Francisco, California. I'm looking forward to another great conference, as well as attending my first ACE Director briefing, where Oracle ACE Directors get to hear future plans on the direction of Oracle products and can provide feedback to product teams (and even ask questions of Thomas Kurian), on the Thursday/Friday prior to the event.

I'm fortunate enough to have been selected by the ODTUG to present a user group forum session on Sunday, September 18th, the first official day of Oracle OpenWorld 2016. I'll be sharing an updated version of my GoldenGate and Kafka integration talk, Oracle GoldenGate and Apache Kafka: A Deep Dive into Real-Time Data Streaming (Session UGF6161), previously presented at Collaborate and KScope16. The focus will be on how to use the new Oracle GoldenGate for Big Data release 12.2 to replicate database transactions to Apache Kafka. The topic of this session, real-time data streaming, provides a great solution for the challenging task of ingesting data from multiple different sources, and different structures, and making that data readily available for others in the organization to use. By combining GoldenGate, Oracle’s premier data replication technology, and Apache Kafka, the latest open-source streaming and messaging system for big data, we can implement a fast, durable, and scalable solution. Stop by Moscone South 301 on Sunday at 10:30 a.m. to check it out!

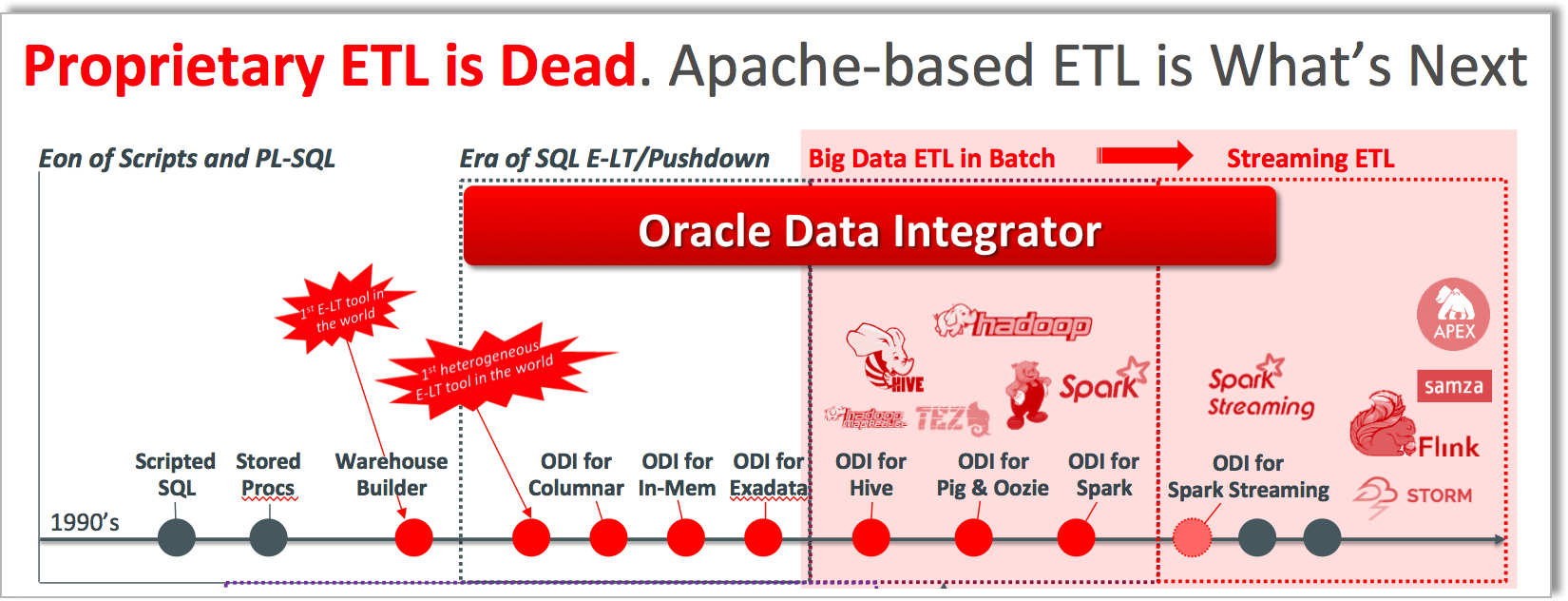

On Tuesday morning, Mark Rittman will join Oracle Data Integrator Product Manager Julien Testut and Oracle A-Team Director Christophe Dupupet to discuss Oracle Data Integration and Big Data in their session titled Oracle Data Integration Platform: A Cornerstone for Big Data (Session CON6624). They'll take a look at how the Oracle Data Integration suite of products (Oracle Data Integrator, Oracle GoldenGate, Oracle Metadata Management, and Oracle Big Data Preparation Cloud Service) can help to avoid the complexity often found in big data initiatives and harness the power of the big data technologies. Join them in Moscone West 2022 on Tuesday, September 20th, at 11 a.m. to learn more!

With many successful Oracle Data Integration and Big Data engagements completed throughout the world, the experts at Rittman Mead continue to lead in the Oracle Big Data space. If you'd like to get together to discuss any of the above topics we're presenting, or anything big data, data integration or BI related, feel free to reach out via email (info@rittmanmead.com), Twitter (@mRainey) or drop a note in the comments below. Hope to see you there!

Using logdump to Troubleshoot the Oracle GoldenGate for Big Data Kafka handler

Oracle GoldenGate for Big Data (OGG BD) supports sending transactions as messages to Kafka topics, both through the native Oracle handler as well as a connector into Confluent's Kafka Connect. In some research that I was doing with it I found an interesting problem that I am going to demonstrate here and show the troubleshooting tools that may be useful to others encountering similar issues.

The source for the data is Swingbench running against Oracle 12c database (pluggable instance). OGG has been configured as follows:

Extract

EXTRACT EXT1 USERID SYSTEM, PASSWORD welcome1 EXTTRAIL ./dirdat/lt SOURCECATALOG ORCL TABLE SOE.*;Datapump (to local machine, an installation of OGG BD)

EXTRACT EXTDP1 RMTHOST LOCALHOST, MGRPORT 7810 RMTTRAIL ./dirdat/rt SOURCECATALOG ORCL TABLE SOE.*;Replicat

REPLICAT rkafka TARGETDB LIBFILE libggjava.so SET property=dirprm/kafka.props REPORTCOUNT EVERY 1 MINUTES, RATE GROUPTRANSOPS 10000 MAP *.*.*, TARGET *.*.*;

When I start the replicat, it abends almost straight away. In ggserr.log I see:

ERROR OGG-15051 Oracle GoldenGate Delivery, rkafka.prm: Java or JNI exception:

oracle.goldengate.util.GGException: Kafka Handler failed to format and process operation: table=[ORCL.SOE.CUSTOMERS], op pos=00000000000000006636, tx pos=00000000000000002437, op ts=2016-09-06 10:59:23.000589.

ERROR OGG-01668 Oracle GoldenGate Delivery, rkafka.prm: PROCESS ABENDING.

Within the properties file for the Kafka handler (dirprm/kafka.props) I increased the logging level

gg.log.level=DEBUG

and restart the replicat. Now we get a debug file written to dirrpt/RKAFKA_debug_log4j.log which includes successful work:

[...]

[main] DEBUG (UserExitDataSource.java:1190) - Received txInd is: WHOLE RBA is: 4939

[main] DEBUG (KafkaHandler.java:484) - Process operation: table=[ORCL.SOE.LOGON], op pos=00000000000000004939, tx pos=00000000000000002437, op ts=2016-09-06 10:59:23.000179

[main] DEBUG (KafkaHandler.java:529) - Creating the producer record and sending to Kafka Producer

[main] DEBUG (NonBlockingKafkaProducer.java:64) - Sending producer record to Non Blocking kafka producer

[main] DEBUG (NonBlockingKafkaProducer.java:76) - NBKP:send(): Returning status: OK

[main] DEBUG (PendingOpGroup.java:316) - now ready to checkpoint? false (was ready? false): {pendingOps=18, groupSize=0, timer=0:00:00.000 [total = 0 ms ]}

[main] DEBUG (UserExitDataSource.java:1401) - applyQueuedConfigurationChanges: on Operation? false

[main] DEBUG (UserExitDataSource.java:2342) - UpdateActivityTime call received

[...]

but then a failure, matching the more high-level message we got previously in ggserr.log:

DEBUG 2016-09-06 15:50:52,909 [main] DEBUG (KafkaHandler.java:484) - Process operation: table=[ORCL.SOE.CUSTOMERS], op pos=00000000000000006636, tx pos=00000000000000002437, op ts=2016-09-06 10:59:23.000589

INFO 2016-09-06 15:50:52,910 [main] INFO (AvroOperationSchemaGenerator.java:36) - Generating the Avro schema for the table [ORCL.SOE.CUSTOMERS].

ERROR 2016-09-06 15:50:52,914 [main] ERROR (AvroOperationFormatter.java:188) - The Avro Formatter formatOp operation failed.

org.apache.avro.SchemaParseException: Illegal character in: SYS_NC00017$

at org.apache.avro.Schema.validateName(Schema.java:1083)

at org.apache.avro.Schema.access$200(Schema.java:79)

at org.apache.avro.Schema$Field.<init>(Schema.java:372)

at org.apache.avro.SchemaBuilder$FieldBuilder.completeField(SchemaBuilder.java:2124)

at org.apache.avro.SchemaBuilder$FieldBuilder.completeField(SchemaBuilder.java:2116)

at org.apache.avro.SchemaBuilder$FieldBuilder.access$5300(SchemaBuilder.java:2034)

So from this we've got the table (ORCL.SOE.CUSTOMERS), log offset (6636), and from the stack trace even a hint at what the issue may be (something to do with the Schema, and a column called SYS_NC00017$).

Now let's see if we can find out more. A colleague of mine pointed me towards Logdump, which is well documented and also covered by Oracle's A-Team blog here.

Launch logdump from the OGG BD folder, ideally using rlwrap so that you can scroll and search through command history:

$ rlwrap ./logdump

Oracle GoldenGate Log File Dump Utility

Version 12.2.0.1.160419 OGGCORE_12.2.0.1.0OGGBP_PLATFORMS_160430.1401

Copyright (C) 1995, 2016, Oracle and/or its affiliates. All rights reserved.

Logdump 1 >

Then enter the following, which will determine what information is shown:

Show record headers

GHDR ONShow detailed column data, in both hex and ASCII

DETAIL ON DETAIL DATA

After that, specify the trail file to be examined:

OPEN /u01/ogg-bd/dirdat/rt000000000

You can enter next (or simply n) to view the records one at a time:

Logdump 6 >OPEN /u01/ogg-bd/dirdat/rt000000000

Current LogTrail is /u01/ogg-bd/dirdat/rt000000000

Logdump 7 >n

2016/09/02 15:54:48.329.147 FileHeader Len 1451 RBA 0

Name: *FileHeader*

3000 0338 3000 0008 4747 0d0a 544c 0a0d 3100 0002 | 0..80...GG..TL..1...

0005 3200 0004 2000 0000 3300 0008 02f2 61ba f7c1 | ..2... ...3.....a...

f3bb 3400 002d 002b 7572 693a 6269 6764 6174 616c | ..4..-.+uri:bigdatal

6974 653a 6c6f 6361 6c64 6f6d 6169 6e3a 3a75 3031 | ite:localdomain::u01

3a6f 6767 3a45 5854 4450 3135 0000 2f35 0000 2b00 | :ogg:EXTDP15../5..+.

2975 7269 3a62 6967 6461 7461 6c69 7465 3a6c 6f63 | )uri:bigdatalite:loc

616c 646f 6d61 696e 3a3a 7530 313a 6f67 673a 4558 | aldomain::u01:ogg:EX

Logdump 8 >n

-------------------------------------------------------------------

Hdr-Ind : E (x45) Partition : . (x00)

UndoFlag : . (x00) BeforeAfter: A (x41)

RecLength : 0 (x0000) IO Time : 2016/09/02 15:54:47.562.301

IOType : 151 (x97) OrigNode : 0 (x00)

TransInd : . (x03) FormatType : R (x52)

SyskeyLen : 0 (x00) Incomplete : . (x00)

AuditRBA : 0 AuditPos : 0

Continued : N (x00) RecCount : 0 (x00)

2016/09/02 15:54:47.562.301 RestartOK Len 0 RBA 1459

Name:

After Image: Partition 0 G s

GGS tokens:

4e00 0004 4558 5431 | N...EXT1

But ploughing through the file a transaction at a time is no fun, so lets zero-in on the problem record. We can either just jump straight to the transaction offset that we got from the error log using POSITION (or POS) followed by NEXT:

Logdump 12 >pos 6636

Reading forward from RBA 6636

Logdump 13 >n

-------------------------------------------------------------------

Hdr-Ind : E (x45) Partition : . (x0c)

UndoFlag : . (x00) BeforeAfter: A (x41)

RecLength : 256 (x0100) IO Time : 2016/09/06 11:59:23.000.589

IOType : 5 (x05) OrigNode : 255 (xff)

TransInd : . (x00) FormatType : R (x52)

SyskeyLen : 0 (x00) Incomplete : . (x00)

AuditRBA : 393 AuditPos : 30266384

Continued : N (x00) RecCount : 1 (x01)

2016/09/06 11:59:23.000.589 Insert Len 256 RBA 6636

Name: ORCL.SOE.CUSTOMERS (TDR Index: 3)

After Image: Partition 12 G b

0000 000a 0000 0000 0000 0001 86a1 0001 000a 0000 | ....................

0006 616e 7477 616e 0002 000b 0000 0007 7361 6d70 | ..antwan........samp

736f 6e00 0300 0600 0000 0275 7300 0400 0b00 0000 | son........us.......

0741 4d45 5249 4341 0005 000a 0000 0000 0000 0000 | .AMERICA............

8980 0006 001d 0000 0019 616e 7477 616e 2e73 616d | ..........antwan.sam

7073 6f6e 406f 7261 636c 652e 636f 6d00 0700 0a00 | pson@oracle.com.....

0000 0000 0000 0000 9500 0800 1500 0032 3031 362d | ...............2016-

Column 0 (x0000), Len 10 (x000a)

0000 0000 0000 0001 86a1 | ..........

Column 1 (x0001), Len 10 (x000a)

0000 0006 616e 7477 616e | ....antwan

Column 2 (x0002), Len 11 (x000b)

0000 0007 7361 6d70 736f 6e | ....sampson

Column 3 (x0003), Len 6 (x0006)

0000 0002 7573 | ....us

Column 4 (x0004), Len 11 (x000b)

0000 0007 414d 4552 4943 41 | ....AMERICA

Column 5 (x0005), Len 10 (x000a)

0000 0000 0000 0000 8980 | ..........

Column 6 (x0006), Len 29 (x001d)

0000 0019 616e 7477 616e 2e73 616d 7073 6f6e 406f | ....antwan.sampson@o

7261 636c 652e 636f 6d | racle.com

Column 7 (x0007), Len 10 (x000a)

0000 0000 0000 0000 0095 | ..........

Column 8 (x0008), Len 21 (x0015)

0000 3230 3136 2d30 392d 3036 3a30 303a 3030 3a30 | ..2016-09-06:00:00:0

30 | 0

Column 9 (x0009), Len 14 (x000e)

0000 000a 4f63 6361 7369 6f6e 616c | ....Occasional

Column 10 (x000a), Len 9 (x0009)

0000 0005 4d75 7369 63 | ....Music

Column 11 (x000b), Len 21 (x0015)

0000 3139 3635 2d30 352d 3130 3a30 303a 3030 3a30 | ..1965-05-10:00:00:0

30 | 0

Column 12 (x000c), Len 5 (x0005)

0000 0001 59 | ....Y

Column 13 (x000d), Len 5 (x0005)

0000 0001 4e | ....N

Column 14 (x000e), Len 10 (x000a)

0000 0000 0000 0002 49f1 | ........I.

Column 15 (x000f), Len 10 (x000a)

0000 0000 0000 0002 49f1 | ........I.

or we can also use the FILTER command, but we'll come back to that in a moment. First let's have a look at the record in question that's causing the Kafka handler to abend. It's shown in full above.

The table name matches - ORCL.SOE.CUSTOMERS, and we can see that the operation was an INSERT along with the values for sixteen columns. Now, since we know that the error thrown by the Kafka handler was something to do with schema and columns, let's take a step back. The record we're looking at is the actual data record, but in the trail file will also be metadata about the table itself which will have been read by the handler. We can look for all records in the trail file relating to this table using the FILTER command (preceeded by a POS 0 to move the read back to the beginning of the file):

Logdump 37 >POS 0

Reading forward from RBA 0

Logdump 38 >FILTER INCLUDE FILENAME ORCL.SOE.CUSTOMERS

Logdump 39 >N

-------------------------------------------------------------------

Hdr-Ind : E (x45) Partition : . (x00)

UndoFlag : . (x00) BeforeAfter: A (x41)

RecLength : 1464 (x05b8) IO Time : 2016/09/06 11:59:26.461.886

IOType : 170 (xaa) OrigNode : 2 (x02)

TransInd : . (x03) FormatType : R (x52)

SyskeyLen : 0 (x00) Incomplete : . (x00)

DDR/TDR Idx: (002, 003) AuditPos : 30266384

Continued : N (x00) RecCount : 1 (x01)

2016/09/06 11:59:26.461.886 Metadata Len 1464 RBA 5103

Name: ORCL.SOE.CUSTOMERS

*

1)Name 2)Data Type 3)External Length 4)Fetch Offset 5)Scale 6)Level

7)Null 8)Bump if Odd 9)Internal Length 10)Binary Length 11)Table Length 12)Most Sig DT

13)Least Sig DT 14)High Precision 15)Low Precision 16)Elementary Item 17)Occurs 18)Key Column

19)Sub DataType 20)Native DataType 21)Character Set 22)Character Length 23)LOB Type 24)Partial Type

*

TDR version: 1

Definition for table ORCL.SOE.CUSTOMERS

Record Length: 542

Columns: 18

CUSTOMER_ID 134 13 0 0 0 1 0 8 8 8 0 0 0 0 1 0 1 3 2 -1 0 0 0

CUST_FIRST_NAME 64 40 12 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

CUST_LAST_NAME 64 40 58 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

NLS_LANGUAGE 64 3 104 0 0 1 0 3 3 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

NLS_TERRITORY 64 30 112 0 0 1 0 30 30 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

CREDIT_LIMIT 134 11 148 2 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

CUST_EMAIL 64 100 160 0 0 1 0 100 100 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

ACCOUNT_MGR_ID 134 13 266 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

CUSTOMER_SINCE 192 19 278 0 0 1 0 19 19 19 0 5 0 0 1 0 0 0 12 -1 0 0 0

CUSTOMER_CLASS 64 40 300 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

SUGGESTIONS 64 40 346 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

DOB 192 19 392 0 0 1 0 19 19 19 0 5 0 0 1 0 0 0 12 -1 0 0 0

MAILSHOT 64 1 414 0 0 1 0 1 1 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

PARTNER_MAILSHOT 64 1 420 0 0 1 0 1 1 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

PREFERRED_ADDRESS 134 13 426 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

PREFERRED_CARD 134 13 438 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

SYS_NC00017$ 64 40 450 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

SYS_NC00018$ 64 40 496 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

End of definition

I spy with my little eye ... SYS_NC00017$, which was named in the debug log that we saw above. Also note:

Columns: 18

So the OGG metadata for the table shows it with eighteen columns, including two SYS_[...]. If you look at the data shown in the record at position 6636 above you'll see that there are only sixteen columns of data. Let's now check out the schema for the table in question in Oracle.

SQL> select COLUMN_NAME,DATA_TYPE from user_tab_columns where table_name = 'CUSTOMERS';

COLUMN_NAME DATA_TYPE

-------------------- ----------------

PREFERRED_CARD NUMBER

PREFERRED_ADDRESS NUMBER

PARTNER_MAILSHOT VARCHAR2

MAILSHOT VARCHAR2

DOB DATE

SUGGESTIONS VARCHAR2

CUSTOMER_CLASS VARCHAR2

CUSTOMER_SINCE DATE

ACCOUNT_MGR_ID NUMBER

CUST_EMAIL VARCHAR2

CREDIT_LIMIT NUMBER

NLS_TERRITORY VARCHAR2

NLS_LANGUAGE VARCHAR2

CUST_LAST_NAME VARCHAR2

CUST_FIRST_NAME VARCHAR2

CUSTOMER_ID NUMBER

16 rows selected.

Sixteen columns. Not eighteen, as the OGG trail file Metadata record showed. Hmmm.

Interestingly, Google throws up a match for this very column in which the output of Dbvisit's replicate tool run against the Swingbench schema announces:

Column SYS_NC00017$ is special: virtual column. Excluding.

Column SYS_NC00017$ is special: hidden column. Excluding.

Column SYS_NC00017$ is special: system-generated column. Excluding.

That it's a hidden column we'd pretty much guessed given its elusiveness. But - virtual column? system generated? This then prompted me to look at the indices on the table:

SQL> SELECT TABLE_NAME, INDEX_NAME, COLUMN_NAME

FROM USER_IND_COLUMNS

WHERE TABLE_NAME = 'CUSTOMERS';

TABLE_NAME INDEX_NAME COLUMN_NAME

---------------- -------------------------------- --------------------

CUSTOMERS CUST_ACCOUNT_MANAGER_IX ACCOUNT_MGR_ID

CUSTOMERS CUST_EMAIL_IX CUST_EMAIL

CUSTOMERS CUST_FUNC_LOWER_NAME_IX SYS_NC00017$

CUSTOMERS CUST_FUNC_LOWER_NAME_IX SYS_NC00018$

CUSTOMERS CUSTOMERS_PK CUSTOMER_ID

CUSTOMERS CUST_DOB_IX DOB

Aha! I spy system generated columns! Let's take a closer look at the CUST_FUNC_LOWER_NAME_IX index:

SQL> SELECT INDEX_NAME, INDEX_TYPE

FROM USER_INDEXES

WHERE TABLE_NAME = 'CUSTOMERS'

AND INDEX_NAME='CUST_FUNC_LOWER_NAME_IX';

INDEX_NAME INDEX_TYPE

-------------------------------- ---------------------------

CUST_FUNC_LOWER_NAME_IX FUNCTION-BASED NORMAL

So we have a function-based index, which in the background appears to implement itself via two hidden columns. My guess is that the Kafka handler code is taking the metadata definition record of 18 columns too literally, and expecting to find a value for it in the transaction record when it reads it and falls over when it can't. Similar behaviour happens with the Kafka Connect OGG connector when it tries to process this particular record:

ERROR 2016-08-30 17:25:09,548 [main] ERROR (KafkaConnectFormatter.java:251) - The Kafka Connect Row Formatter formatOp operation failed.

java.lang.IndexOutOfBoundsException: Index: 16, Size: 16

at java.util.ArrayList.rangeCheck(ArrayList.java:653)

at java.util.ArrayList.get(ArrayList.java:429)

at oracle.goldengate.datasource.meta.TableMetaData.getColumnMetaData(TableMetaData.java:73)

at oracle.goldengate.kafkaconnect.formatter.KafkaConnectFormatter.formatAfterValues(KafkaConnectFormatter.java:329)

at oracle.goldengate.kafkaconnect.formatter.KafkaConnectFormatter.formatAfterValuesOp(KafkaConnectFormatter.java:278)

at oracle.goldengate.kafkaconnect.formatter.KafkaConnectFormatter.formatOp(KafkaConnectFormatter.java:212)

at oracle.goldengate.kafkaconnect.KafkaConnectHandler.formatOp(KafkaConnectHandler.java:309)

at oracle.goldengate.kafkaconnect.KafkaConnectHandler.transactionCommit(KafkaConnectHandler.java:186)

at oracle.goldengate.datasource.DsEventManager$2.send(DsEventManager.java:414)

at oracle.goldengate.datasource.DsEventManager$EventDispatcher.distributeEvent(DsEventManager.java:231)

at oracle.goldengate.datasource.DsEventManager.fireTransactionCommit(DsEventManager.java:422)

at oracle.goldengate.datasource.AbstractDataSource.fireTransactionCommit(AbstractDataSource.java:490)

at oracle.goldengate.datasource.UserExitDataSource.commitActiveTransaction(UserExitDataSource.java:1582)

at oracle.goldengate.datasource.UserExitDataSource.commitTx(UserExitDataSource.java:1525)

ERROR 2016-08-30 17:25:09,550 [main] ERROR (KafkaConnectHandler.java:312) - Confluent Kafka Handler failed to format and process operation: table=[PDB.SOE.CUSTOMERS], op pos=00000000000000008091, tx pos=00000000000000003011, op ts=2016-07-29 14:59:47.000137

java.lang.IndexOutOfBoundsException: Index: 16, Size: 16

at java.util.ArrayList.rangeCheck(ArrayList.java:653)

at java.util.ArrayList.get(ArrayList.java:429)

at oracle.goldengate.datasource.meta.TableMetaData.getColumnMetaData(TableMetaData.java:73)

Note the IndexOutOfBoundsException error.

Working around the error

I'm in the fortunate position of being in a sandbox environment in which I can modify the source schema to suit my needs - so I just dropped the function-based index. In reality this evidently would not be a good approach on the assumption that the index was there for a good reason!

DROP INDEX "SOE"."CUST_FUNC_LOWER_NAME_IX";

Having run this, we still have the question of how to get the replicat working. To do this we could go the whole-hog and drop and recreate the extracts; or, we can get the replicat to skip the section of the trail file with the records in that we can't process. Assuming you've run the above DROP and then written more data to the table, there'll be a second metadata record in the OGG trail file. We can use the FILTER command to find this:

Logdump 69 >FILTER INCLUDE FILENAME ORCL.SOE.CUSTOMERS;FILTER EXCLUDE RECTYPE 5,134;FILTER MATCH ALL

This shows records for just this table, and excludes record types 5 and 134 (INSERT and UPDATE respectively). We can then scan through the file with NEXT command and see:

Logdump 72 >n

Scanned 10000 records, RBA 2365691, 2016/09/06 12:12:16.001.191

Scanned 20000 records, RBA 4716374, 2016/09/06 14:48:54.971.161

Scanned 30000 records, RBA 7067022, 2016/09/06 14:57:34.000.170

Scanned 40000 records, RBA 9413177, 2016/09/06 15:07:41.000.186

Scanned 50000 records, RBA 11773709, 2016/09/06 15:16:07.000.594

Scanned 60000 records, RBA 14126750, 2016/09/06 15:24:38.001.063

-------------------------------------------------------------------

Hdr-Ind : E (x45) Partition : . (x00)

UndoFlag : . (x00) BeforeAfter: A (x41)

RecLength : 1308 (x051c) IO Time : 2016/09/06 17:11:21.717.818

IOType : 170 (xaa) OrigNode : 2 (x02)

TransInd : . (x03) FormatType : R (x52)

SyskeyLen : 0 (x00) Incomplete : . (x00)

DDR/TDR Idx: (002, 009) AuditPos : 9986576

Continued : N (x00) RecCount : 1 (x01)

2016/09/06 17:11:21.717.818 Metadata Len 1308 RBA 14702330

Name: ORCL.SOE.CUSTOMERS

*

1)Name 2)Data Type 3)External Length 4)Fetch Offset 5)Scale 6)Level

7)Null 8)Bump if Odd 9)Internal Length 10)Binary Length 11)Table Length 12)Most Sig DT

13)Least Sig DT 14)High Precision 15)Low Precision 16)Elementary Item 17)Occurs 18)Key Column

19)Sub DataType 20)Native DataType 21)Character Set 22)Character Length 23)LOB Type 24)Partial Type

*

TDR version: 1

Definition for table ORCL.SOE.CUSTOMERS

Record Length: 450

Columns: 16

CUSTOMER_ID 134 13 0 0 0 1 0 8 8 8 0 0 0 0 1 0 1 3 2 -1 0 0 0

CUST_FIRST_NAME 64 40 12 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

CUST_LAST_NAME 64 40 58 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

NLS_LANGUAGE 64 3 104 0 0 1 0 3 3 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

NLS_TERRITORY 64 30 112 0 0 1 0 30 30 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

CREDIT_LIMIT 134 11 148 2 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

CUST_EMAIL 64 100 160 0 0 1 0 100 100 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

ACCOUNT_MGR_ID 134 13 266 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

CUSTOMER_SINCE 192 19 278 0 0 1 0 19 19 19 0 5 0 0 1 0 0 0 12 -1 0 0 0

CUSTOMER_CLASS 64 40 300 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

SUGGESTIONS 64 40 346 0 0 1 0 40 40 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

DOB 192 19 392 0 0 1 0 19 19 19 0 5 0 0 1 0 0 0 12 -1 0 0 0

MAILSHOT 64 1 414 0 0 1 0 1 1 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

PARTNER_MAILSHOT 64 1 420 0 0 1 0 1 1 0 0 0 0 0 1 0 0 0 1 -1 0 0 0

PREFERRED_ADDRESS 134 13 426 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

PREFERRED_CARD 134 13 438 0 0 1 0 8 8 8 0 0 0 0 1 0 0 3 2 -1 0 0 0

End of definition

Filtering suppressed 62444 records

Here's the new table metadata, for sixten columns only and minus the SYS_[...] columns. Its position as shown in the record above is RBA 14702330. To get the commit sequence number (CSN), which we can use to restart the replicat, we need to enable the display of OGG-generated data in the records (ref):

GGSTOKEN ON

GGSTOKEN DETAIL

The Metadata record itself doesn't have a CSN, so disable the filtering

FILTER OFF

and then go to the next record

Logdump 123 >FILTER OFF

Logdump 124 >N

-------------------------------------------------------------------

Hdr-Ind : E (x45) Partition : . (x0c)

UndoFlag : . (x00) BeforeAfter: A (x41)

RecLength : 255 (x00ff) IO Time : 2016/09/06 17:11:18.000.200

IOType : 5 (x05) OrigNode : 255 (xff)

TransInd : . (x00) FormatType : R (x52)

SyskeyLen : 0 (x00) Incomplete : . (x00)

AuditRBA : 396 AuditPos : 9986576

Continued : N (x00) RecCount : 1 (x01)

2016/09/06 17:11:18.000.200 Insert Len 255 RBA 14703707

Name: ORCL.SOE.CUSTOMERS (TDR Index: 9)

After Image: Partition 12 G b

0000 000a 0000 0000 0000 0009 27c1 0001 000b 0000 | ............'.......

0007 6775 7374 6176 6f00 0200 0a00 0000 0663 6173 | ..gustavo........cas

[...]

GGS tokens:

TokenID x52 'R' ORAROWID Info x00 Length 20

4141 4166 632f 4141 4141 4141 434d 6541 4162 0001 | AAAfc/AAAAAACMeAAb..

TokenID x4c 'L' LOGCSN Info x00 Length 8

3131 3637 3235 3433 | 11672543

TokenID x36 '6' TRANID Info x00 Length 9

3236 2e32 372e 3139 35 | 26.27.195

TokenID x69 'i' ORATHREADID Info x01 Length 2

0001 | ..

It's an INSERT record for our table, with the LOGCSN shown as 11672543.

So if we're happy to ditch all the data in the trail file since it was set up until the point at which we 'fixed' the virtual column issue, we can run in GGSCI:

GGSCI (bigdatalite.localdomain) 44> start rkafka atcsn 0.11672543

Sending START request to MANAGER ...

REPLICAT RKAFKA starting

GGSCI (bigdatalite.localdomain) 49> info rkafka

REPLICAT RKAFKA Last Started 2016-09-06 17:32 Status RUNNING

Checkpoint Lag 00:00:00 (updated 00:00:09 ago)

Process ID 25860

Log Read Checkpoint File ./dirdat/rt000000000

2016-09-06 17:11:22.000764 RBA 14724721

and over in Kafka itself we can now see the records coming through:

$ kafka-console-consumer --zookeeper localhost --topic ORCL.SOE.LOGON

ORCL.SOE.LOGONI42016-09-02 14:56:26.00041142016-09-06T15:50:52.194000(00000000000000002010LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-02:15:56:25

ORCL.SOE.LOGONI42016-09-05 14:39:02.00040942016-09-06T15:50:52.875000(00000000000000002437LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:15:39:00

ORCL.SOE.LOGONI42016-09-05 14:44:15.00046042016-09-06T15:50:52.877000(00000000000000002593LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:15:44:13

ORCL.SOE.LOGONI42016-09-05 14:46:16.00043642016-09-06T15:50:52.879000(00000000000000002748LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:15:46:11

ORCL.SOE.LOGONI42016-09-05 16:17:25.00014242016-09-06T15:50:52.881000(00000000000000002903LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:17:17:24

ORCL.SOE.LOGONI42016-09-05 16:22:38.00040142016-09-06T15:50:52.883000(00000000000000003058LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:17:22:37

ORCL.SOE.LOGONI42016-09-05 16:25:16.00015142016-09-06T15:50:52.885000(00000000000000003215LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:17:25:16

ORCL.SOE.LOGONI42016-09-05 16:26:25.00017542016-09-06T15:50:52.886000(00000000000000003372LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:17:26:25

ORCL.SOE.LOGONI42016-09-05 16:27:20.00018642016-09-06T15:50:52.888000(00000000000000003527LOGON_IDCUSTOMER_IDLOGON_DATE4242&2016-09-05:17:27:19

A Better Workaround?

Per Handling Other Database Properties, virtual columns can be handled by using the TABLE FETCHCOLS configuration on the extract to read the virtual values and MAP of the replicat to map them to actual columns on the target. Unfortunately, the system-generated column name isn't accepted by OGG in the FETCHCOLS syntax:

INFO OGG-06507 Oracle GoldenGate Capture for Oracle, ext1.prm: MAP (TABLE) resolved (entry ORCL.SOE.CUSTOMERS): TABLE "ORCL"."SOE"."CUSTOMERS", FETCHCOLS(SYS_NC00017$).

ERROR OGG-00366 Oracle GoldenGate Capture for Oracle, ext1.prm: Invalid column specified in FETCHCOLS/FETCHCOLSEXCEPT: SYS_NC00017$.

ERROR OGG-01668 Oracle GoldenGate Capture for Oracle, ext1.prm: PROCESS ABENDING.

Another tack to try, given that in our case we simply want to make sure the virtual columns don't get picked up at all - is to try and ignore the column altogether. Unfortunately from my experimentation with COLSEXCEPT it appears that OGG excludes specified columns from record data, but not the initial metadata (which is what causes the above problems in the first place). Even if this had worked, COLSEXCEPT doesn't like the system-generated column name, abending the Extract process with:

INFO OGG-06507 Oracle GoldenGate Capture for Oracle, ext1.prm: MAP (TABLE) resolved (entry ORCL.SOE.CUSTOMERS): TABLE "ORCL"."SOE"."CUSTOMERS", COLSEXCEPT(SYS_NC00017$).

ERROR OGG-00366 Oracle GoldenGate Capture for Oracle, ext1.prm: Invalid column specified in COLS/COLSEXCEPT: SYS_NC00017$.

ERROR OGG-01668 Oracle GoldenGate Capture for Oracle, ext1.prm: PROCESS ABENDING.

Conclusion

Oracle GoldenGate is a fantastic way to stream changes from many different RDBMS to a variety of targets, including Kafka. The potential that this offers in terms of data integration and pipelines is great. This post has hopefully shed a little bit of light on how to go about troubleshooting issues that can occur when using this set of tools. Do let me know in the comments below if you have better suggestions for how to deal with the virtual columns created as a result of the function-based index!

Oracle Data Integrator: Upgrade to ODI 12c – A Case Study

We don’t often get a chance to share our customer success stories due to various reasons, such as required anonymity of the client or certain other legalities. But when we do, it sure feels great! Recently, Eurocontrol, a longtime Rittman Mead client in Brussels, Belgium, performed an upgrade of Oracle Data Integrator from 11g to 12c.  With the help of Rittman Mead, and specifically data integration expert Jérôme Françoisse, Eurocontrol was able to perform the upgrade successfully without any downtime. The upgrade process, and outcome, caught the attention of the Oracle Data Integration product management team, prompting the case study titled “Eurocontrol Improves ETL Development Time-to-Market After Upgrading to Oracle Data Integrator 12c” to be written and shared with the world.

With the help of Rittman Mead, and specifically data integration expert Jérôme Françoisse, Eurocontrol was able to perform the upgrade successfully without any downtime. The upgrade process, and outcome, caught the attention of the Oracle Data Integration product management team, prompting the case study titled “Eurocontrol Improves ETL Development Time-to-Market After Upgrading to Oracle Data Integrator 12c” to be written and shared with the world.

Eurocontrol is the European organization for the safety of air navigation, handling management of the air traffic network, route charging, and working with other organizations to build a Single European Sky. Due to the critical air traffic demand data flowing through their ETL system, the upgrade had to be completed quickly, efficiently, and with minimal downtime. With Rittman Mead’s Oracle Data Integrator expertise, there was no issue exceeding these expectations. Beyond performing the upgrade, Jérôme and team also modified Groovy scripts, custom Knowledge Modules and other objects to work with the updated ODI SDK, and helped perform a minor cleanup of the mapping objects.

Eurocontrol can now take full advantage of the latest and greatest features that Oracle Data Integrator 12c has to offer. From flow-based mappings and component KMs to deployment specifications and reusable mappings, ODI 12c is being used in full-force. Even more impressive, Eurocontrol has not had any unplanned ODI agent downtime since the upgrade!

If your company is planning an upgrade of Oracle Data Integrator 11g to the latest version ODI 12c, as Eurocontrol did, go ahead and give Rittman Mead a shout at info@rittmanmead.com and let’s see how we can help you along the way.

Becky’s BI Apps Corner: OBIA Back-to-Beginnings – Naming Conventions and Jargon

It's easy to talk about a technology using only jargon. It's much harder to talk about a technology without using jargon. I have seen many meetings between business and IT break down because of this communication barrier. I find it more discouraging when I see this communication breakdown happen between advanced IT staff and new IT staff. For those of us in any technological field, it's easy to forget how long it took to learn all of the ins and outs, the terminology and jargon.

During a recent project, I had another consultant shadowing me to get experience with OBIA. (Hi, Julia!) I was 'lettering' a lot so I decided it was time to diagram my jargon. My scribbles on a whiteboard gave me the idea that it might be helpful to do a bit of connecting the dots between OBIA and data warehousing jargon and naming conventions used in OBIA.

BI Applications Load Plan phases:

SDE - Source Dependent Extract

SDE is the first phase in the ETL process that loads source data into the staging area. SDE tasks are source database specific. SDE mappings that run in the load plan will load staging tables. These tables end with _DS and _FS among others.

SIL - Source Independent Load

SIL is the second phase in the ETL process that takes the staged data from the staging tables and loads or transforms them into the target tables. SILOS mappings that run in the load plan will load dimension and fact tables. These tables end with _D and _F among others.

PLP - Post Load Process

This third and final phase in the ETL process occurs after the target tables have been loaded and is commonly used for loading aggregate fact tables. PLP mappings that run in the load plan will load aggregate tables ending with _A. Aggregate tables are often fact table data that has been summed up by a common dimension. For example, a common report might look at finance data by the month. Using the aggregate tables by fiscal period would help improve reporting response time.

For further information about any of the other table types, be sure to read Table Types for Oracle Business Analytics Warehouse. Additionally, this page has probably the best explanation for staging tables and incremental loads.

Source System Acronyms

Since the SDE tasks are source database specific, the SDE mappings' names also include an acronym for the source system in the mapping name. Below are the supported source database systems and the acronyms used in the names and an example for each.

Oracle E-Business Suite - ORA

SDE_ORA_DomainGeneral_Currency

Oracle Siebel - SBL

SDE_SBL_DOMAINGENERAL_CURRENCY

JD Edwards Enterprise One - JDEE

SDE_JDE_DomainGeneral_Currency

PeopleSoft - PSFT

SDE_PSFT_DomainGeneral_Currency_FINSCM

Oracle Fusion Applications - FUSION

SDE_FUSION_DomainGeneral_Currency

Taleo - TLO

SDE_TLO_DomainGeneral_Country

Oracle Service Cloud - RNCX

SDE_RNCX_DomainGeneral

Universal - Universal

SDE_Universal_DomainGeneral

This wraps up our quick "Back-to-Beginnings" refresher on naming conventions and the jargon used in relation to ETL and mappings. Let me know in the comments below if there are other topics you would like me to cover in my "Back-to-Beginnings" series. As always, be sure to check out our available training, which now includes remote training options, and our On Demand Training Beta Program. For my next post I'll be covering two new features in OBIA 11.1.1.10.2, Health Check and ETL Diagnostics, which are the missing pieces you didn't know you've been waiting for.

How To Poke Around OBIEE on Linux with strace (Working with Unsupported ODBC Sources in OBIEE 12c)

OBIEE 12c (and 11g and 10g before it) supports three primary ways of connecting to data sources:

- Native Gateway, such as OCI for Oracle. This is always the preferred option as it gives the greatest support and performance.

- Data Direct ODBC Drivers, a set of which are bundled with OBIEE for enabling connectivity to sources such as SQL Server, MySQL, Hive, and Impala. The configuration of these is documented in the OBIEE manuals and is generally a supported configuration.

- Native ODBC Drivers.

There's also kind of a fourth option, which is JDBC, as explained by Fiston in this excellent post. It's OBIEE 12c only and not fully documented/supported.

In this article we're going to dig into the third option (native ODBC) and look at how it can be used, and also how to troubleshoot it (and the OBIEE stack in general) on Linux.

ODBC (Open Database Connectivity) is a documented API designed to enable applications to work with databases, without one explicitly supporting the other. A comparable protocol is JDBC, which is also widely used (but as yet not fully supported/documented within OBIEE). To use native ODBC drivers with OBIEE on *nix, you install the driver on your OBIEE server and then configure OBIEE to use it. Mark Rittman wrote an example of how to do this with the original Hive ODBC drivers here (before they were formally bundled with OBIEE), and the manual shows how to use it with a native driver for Teradata.

My interest in this is the Apache Drill tool, which enables querying with SQL against a multitude of datasources, including things like JSON files, Hive tables, RDBMS, and more. MapR have released an ODBC driver for it, and I thought it'd be neat to get Apache Drill to work with OBIEE. It evidently does work for OBIEE running on Windows, but I wanted to be able to use it on my standard environment, Linux. This blog post is a warts-and-all exploration of the process I went through to get it to work, since I thought it might be of interest to see some of the forensic methods available when trying to get things to work. For just the headlines, see Using Apache Drill with OBIEE 12c.

First Things First - Setting Up Apache Drill

Drill can be deployed in distributed configuration (with all the parallel processing goodness which that brings), but also run as a single instance locally. For the sake of simplicity that's what I'm going to do here. It's rather easy to do:

# Download Apache Drill

wget http://www.apache.org/dyn/closer.cgi/drill/drill-1.7.0/apache-drill-1.7.0.tar.gz

# Unpack

tar -xvf apache-drill-1.7.0.tar.gz

# Run

cd /opt/apache-drill-1.7.0/ && bin/sqlline -u jdbc:drill:zk=local

You need to make sure you've got a recent JDK available, and if you're running it on SampleApp, watch out for this odd problem that I had which was related to classpaths and manifested itself with the error java.lang.NoSuchMethodError: com.fasterxml.jackson.databind.JavaType.isReferenceType()Z.

All being well, you'll now have a Drill prompt:

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Aug 09, 2016 5:51:43 AM org.glassfish.jersey.server.ApplicationHandler initialize

INFO: Initiating Jersey application, version Jersey: 2.8 2014-04-29 01:25:26...

apache drill 1.7.0

"say hello to my little drill"

0: jdbc:drill:zk=local>

From here you can run a simple query to check the version:

0: jdbc:drill:zk=local> SELECT version FROM sys.version;

+----------+

| version |

+----------+

| 1.7.0 |

+----------+

1 row selected (0.392 seconds)

or query one of the built-in sample data sets:

0: jdbc:drill:zk=local> select count(*) from cp.`employee.json`;

+---------+

| EXPR$0 |

+---------+

| 1155 |

+---------+

1 row selected (0.977 seconds)

For more examples of Drill, see the tutorials.

Setting up Drill ODBC on Linux

With Drill setup and running locally, let's now install the ODBC driver. This is all on SampleApp v511 / Oracle Linux 6.7.

sudo rpm -i http://package.mapr.com/tools/MapR-ODBC/MapR_Drill/MapRDrill_odbc_v1.2.1.1000/MapRDrillODBC-1.2.1.x86_64.rpm

Per the system requirements, we need to make sure that we're using one of the supported ODBC Driver Managers, so we'll install iODBC to start with (the other option being unixODBC):

sudo yum install -y unixodbc

Now follow the configuration instructions. To start with we'll do this in isolation of OBIEE to check that it works, and then bring it into OBIEE's world (for example, odbc.ini already exists in OBIEE).

Set environment variables

export ODBCINI=~/.odbc.ini export MAPRDRILLINI=~/.mapr.drillodbc.ini export LD_LIBRARY_PATH=/opt/mapr/drillodbc/lib/64Set up ODBC DSN in

~/.odbc.ini[ODBC Data Sources] DrillDSN=MapR Drill ODBC Driver 64-bit [DrillDSN] Driver=/opt/mapr/drillodbc/lib/64/libmaprdrillodbc64.so Description=Drill ODBC Driver ConnectionType=Direct HOST=localhost PORT=31010 AuthenticationType=No AuthenticationCopy the default ODBC driver manager config

cp /opt/mapr/drillodbc/Setup/odbcinst.ini ~/.odbcinst.iniConfigure the Drill ODBC driver in

~/.mapr.drillodbc.iniHere I've set the log level to Trace, so that we can see what's going on in depth—in practice this would generate huge amounts of unnecessary log data so set it to a lower value (e.g. 0) for actual use.

[Driver] DisableAsync=0 DriverManagerEncoding=UTF-32 ErrorMessagesPath=/opt/mapr/drillodbc/ErrorMessages LogLevel=6 LogPath=/tmp/odbc.log SwapFilePath=/tmp ODBCInstLib=libiodbcinst.so.2 # This is the ODBC Driver Manager library # Note the documentation - the DriverManagerEncoding (above) will vary depending # on the driver manager in use. # See https://drill.apache.org/docs/configuring-odbc-on-linux/#configuring-.mapr.drillodbc.ini

Having set this up, we'll now test it:

[oracle@demo ~]$ iodbctest "DSN=DrillDSN"

You should see:

iODBC Demonstration program

This program shows an interactive SQL processor

Driver Manager: 03.52.0709.0909

Driver: 1.2.1.1001 (MapR Drill ODBC Driver)

SQL>

From where you can enter a command (don't include the ; suffix as this is added automatically)

SQL> SELECT version FROM sys.version

version

--------

1.7.0

result set 1 returned 1 rows.

- If you get

Unable to locate SQLGetPrivateProfileString functionthen check that yourLD_LIBRARY_PATHincludes the location of the Driver Manager (libiodbc.so.2), and that the exact library specified byODBCInstLibexists—in my installation it was calledlibiodbcinst.so.2rather thanlibiodbc.soas shown in the docs. Also check that you've setMAPRDRILLINIenvironment variable.libiodbcinstalls into/usr/lib64which is one of the default paths checked for library files, hence not including it explicitly in theLD_LIBRARY_PATHenvironment variable.

- If you just get

Have a nice day.with no error but noSQL>prompt, check your ODBC DSN configuration. I hit this issue when inadvertently omitting theAuthenticationTypeparameter.

If you head over to /tmp/odbc.log/ you should see a file called driver.log with a bunch of trace data in it. Here's the data from the above session, excluding TRACE logs:

Aug 09 06:23:35.701 INFO 1148090112 Driver::LogVersions: SDK Version: 09.05.07.1008

Aug 09 06:23:35.701 INFO 1148090112 Driver::LogVersions: DSII Version: 1.2.1.1001

Aug 09 06:23:35.701 INFO 1148090112 Driver::Initialize: Database CHAR Encoding: UTF-8

Aug 09 06:23:35.701 INFO 1148090112 Driver::Initialize: Database WCHAR Encoding: UTF-8

Aug 09 06:23:35.701 INFO 1148090112 Driver::Initialize: ANSI CHAR Encoding: UTF-8

Aug 09 06:23:35.701 INFO 1148090112 Driver::Initialize: Driver Manager WCHAR Encoding: UTF-32LE

Aug 09 06:23:35.702 INFO 1148090112 Driver::Initialize: Detected Driver Manager: iODBC, 3.52.7

Aug 09 06:23:35.702 INFO 1148090112 Driver::Initialize: Locale name: en_GB

Aug 09 06:23:35.702 INFO 1148090112 Driver::Initialize: Bitness: 64-bit

Aug 09 06:23:35.702 INFO 1148090112 CInterface::SQLAllocHandle: Allocating environment handle.

Aug 09 06:23:35.702 INFO 1148090112 Environment::SQLSetEnvAttr: Attribute: SQL_ATTR_ODBC_VERSION (200)

Aug 09 06:23:35.702 INFO 1148090112 EnvironmentAttributes::SetAttribute: Setting ODBC version to: 3

Aug 09 06:23:35.702 INFO 1148090112 CInterface::SQLAllocHandle: Allocating connection handle.

Aug 09 06:23:35.702 INFO 1148090112 Connection::SQLSetConnectAttr: Attribute: Unknown Attribute (1051)

Aug 09 06:23:35.702 INFO 1148090112 ConnectionAttributes::SetAttribute: Invalid attribute: 1051

Aug 09 06:23:35.704 ERROR 1148090112 Connection::SQLSetConnectAttr: [MapR][ODBC] (10210) Attribute identifier invalid or not supported: 1051

Aug 09 06:23:35.708 INFO 1148090112 DiracClient::DiracClient: Create a new Dirac Client [194bd90] (handshakeTimeout = 5, queryTimeout = 180)

Aug 09 06:23:35.708 INFO 1148090112 DiracClient::connect: Connection String 'local=localhost:31010' & Default Schema ''

Aug 09 06:23:35.712 DEBUG 1148090112 DiracClient::connect: Connection successfully.

Aug 09 06:23:35.713 INFO 1148090112 Connection::SQLGetInfoW: InfoType: SQL_CURSOR_COMMIT_BEHAVIOR (23)

Aug 09 06:23:35.713 INFO 1148090112 Connection::SQLGetInfoW: InfoType: SQL_CURSOR_ROLLBACK_BEHAVIOR (24)

Aug 09 06:23:35.713 INFO 1148090112 Connection::SQLGetInfoW: InfoType: SQL_DRIVER_VER (7)

Aug 09 06:23:35.713 INFO 1148090112 Connection::SQLGetInfoW: InfoType: SQL_DRIVER_NAME (6)

Aug 09 06:23:35.713 INFO 1148090112 CInterface::SQLAllocHandle: Allocating statement handle.

Aug 09 06:23:35.714 INFO 1148090112 Statement::SQLGetStmtAttrW: Attribute: SQL_ATTR_APP_ROW_DESC (10010)

Aug 09 06:23:35.714 INFO 1148090112 Statement::SQLGetStmtAttrW: Attribute: SQL_ATTR_APP_PARAM_DESC (10011)

Aug 09 06:23:35.714 INFO 1148090112 Statement::SQLGetStmtAttrW: Attribute: SQL_ATTR_IMP_ROW_DESC (10012)

Aug 09 06:23:35.714 INFO 1148090112 Statement::SQLGetStmtAttrW: Attribute: SQL_ATTR_IMP_PARAM_DESC (10013)

Aug 09 06:25:39.409 INFO 1148090112 StatementState::InternalPrepare: Preparing query: SELECT version FROM sys.version

Aug 09 06:25:39.412 INFO 1148090112 DiracClient::ExecuteStatementDirect: DRResultSet [19494c0] executes query 'SELECT * FROM (SELECT version FROM sys.version) T LIMIT 0'

Aug 09 06:25:39.412 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: START DEQUEUE. Current ResultQueue Size = 0

Aug 09 06:25:40.219 DEBUG 979547904 DiracClient::QueryResultsListener: DRResultSet [19494c0]: Handle RecordBatch [34001c40], DrillClientError [0]'

Aug 09 06:25:40.219 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: END DEQUEUE. Get RecordBatch 34001c40 [m_recordCount = 0 ]. Now ResultQueue Size = 0

Aug 09 06:25:40.219 DEBUG 1148090112 DRResultSet::InitializeColumns: Column 1 version: {DataMode: OPTIONAL, MinorType: VARCHAR, Precision = 1, Scale = 0}

Aug 09 06:25:40.219 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: START DEQUEUE. Current ResultQueue Size = 0

Aug 09 06:25:40.276 DEBUG 979547904 DiracClient::QueryResultsListener: DRResultSet [19494c0]: Handle RecordBatch [0], DrillClientError [0]'

Aug 09 06:25:40.276 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: status = QRY_COMPLETED.

Aug 09 06:25:40.276 INFO 1148090112 DiracClient::CloseStatement: Close statement and free query resources

Aug 09 06:25:40.276 INFO 1148090112 DiracClient::ExecuteStatementDirect: DRResultSet [19494c0] executes query 'SELECT version FROM sys.version'

Aug 09 06:25:40.277 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: START DEQUEUE. Current ResultQueue Size = 0

Aug 09 06:25:40.748 DEBUG 979547904 DiracClient::QueryResultsListener: DRResultSet [19494c0]: Handle RecordBatch [3400cb60], DrillClientError [0]'

Aug 09 06:25:40.750 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: END DEQUEUE. Get RecordBatch 3400cb60 [m_recordCount = 1 ]. Now ResultQueue Size = 0

Aug 09 06:25:40.750 DEBUG 1148090112 DRResultSet::InitializeColumns: Column 1 version: {DataMode: OPTIONAL, MinorType: VARCHAR, Precision = 1, Scale = 0}

Aug 09 06:25:40.751 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: START DEQUEUE. Current ResultQueue Size = 0

Aug 09 06:25:40.985 DEBUG 979547904 DiracClient::QueryResultsListener: DRResultSet [19494c0]: Handle RecordBatch [0], DrillClientError [0]'

Aug 09 06:25:40.985 DEBUG 1148090112 DRResultSet::GetNextRecordBatch: status = QRY_COMPLETED.

Aug 09 06:25:40.986 INFO 1148090112 DiracClient::CloseStatement: Close statement and free query resources

Aug 09 06:25:40.991 ERROR 1148090112 Statement::SQLCloseCursor: [MapR][ODBC] (10510) Invalid cursor state.

Aug 09 06:27:19.748 ERROR 1148090112 Statement::SQLCloseCursor: [MapR][ODBC] (10510) Invalid cursor state.

Aug 09 06:27:19.748 INFO 1148090112 CInterface::SQLFreeHandle: Freeing statement handle.

Aug 09 06:27:19.748 INFO 1148090112 DiracClient::~DiracClient: Close the Dirac Client [194bd90]

Aug 09 06:27:19.748 INFO 1148090112 CInterface::SQLFreeHandle: Freeing connection handle.

Aug 09 06:27:19.749 INFO 1148090112 CInterface::SQLFreeHandle: Freeing environment handle.

Hooking it up to OBIEE

We've shown that we can connect to Apache Drill using ODBC and query it. Let's see if we get it to work for OBIEE. Our starting point is the 12c docs, Configuring Database Connections Using Native ODBC Drivers.

Environment variables are defined per-component in a

.propertiesfile, which for the BI Server (OBIS / nqsserver) isBI_DOMAIN/config/fmwconfig/bienv/obis/obis.properties, so on SampleApp/app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/OBIS/obis.properties. To this file (which in 12.2.1 is empty by default) we add:MAPRDRILLINI=/home/oracle/.mapr.drillodbc.ini LD_LIBRARY_PATH=/opt/mapr/drillodbc/lib/64Add the Drill DSN to

odbc.iniwhich for OBIEE already exists and is populated with other ODBC configurations. You'll find the file inBI_DOMAIN/config/fmwconfig/bienv/core, which on SampleApp is/app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini.Add to the

[ODBC Data Sources]sectionDrillDSN=MapR Drill ODBC Driver 64-bitAdd a section to the bottom of the file:

[DrillDSN] Driver=/opt/mapr/drillodbc/lib/64/libmaprdrillodbc64.so AuthenticationType=No Authentication Description=Drill ODBC Driver ConnectionType=Direct HOST=localhost PORT=31010

Now restart the BI Server:

/app/oracle/biee/user_projects/domains/bi/bitools/bin/stop.sh -i obis1 && /app/oracle/biee/user_projects/domains/bi/bitools/bin/start.sh -i obis1

We can check that the BI Server has picked up our new environment variables by using the /proc pseudo file-system (you can also see an environment variable dump as part of the obis1.out logfile during startup):

[oracle@demo ~]$ strings /proc/$(pgrep nqsserver)/environ|grep mapr

LD_LIBRARY_PATH=/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib:/app/oracle/biee/bi/bifoundation/server/bin:/app/oracle/biee/bi/bifoundation/web/bin:/app/oracle/biee/bi/clients/Essbase/EssbaseRTC/bin:/app/oracle/biee/bi/lib:/app/oracle/biee/lib:/app/oracle/biee/oracle_common/adr:/app/oracle/biee/oracle_common/lib:/usr/lib:/lib:/opt/mapr/drillodbc/lib/64:::/app/oracle/app/oracle/product/12.1.0/dbhome_1/lib:/app/oracle/endeca/olt/bin:/app/oracle/biee/wlserver/server/native/linux/x86_64:/app/oracle/biee/wlserver/server/native/linux/x86_64/oci920_8:/app/oracle/biee/wlserver/server/native/linux/x86_64:/app/oracle/biee/wlserver/server/native/linux/x86_64/oci920_8

MAPRDRILLINI=/home/oracle/.mapr.drillodbc.ini

Over in the Administration Tool I set up a new Database (type: ODBC Basic) and associated connection pool (ODBC 2.0) pointing to DrillDSN.

Now to try it out! In Answers I build a Direct Database Request:

But...oh no!

Odbc driver returned an error (SQLExecDirectW).

State: HY000. Code: 10058. [NQODBC] [SQL_STATE: HY000] [nQSError: 10058] A general error has occurred. (HY000)

State: HY000. Code: 12010. [nQSError: 12010] Communication error connecting to remote end point: address = demo.us.oracle.com; port = 7792. (HY000)

SQL Issued: {call NQSGetQueryColumnInfo('EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version;')}

Let's pick through this, because it's a common error and easily misinterpreted.

Odbc driver returned an error (SQLExecDirectW).When you run an analysis/dashboard, Presentation Services (OBIPS/sawserver) connects to the BI Server (OBIS/nqsserver) to send the request as Logical SQL, and it connects to the BI Server using ODBC. Therefore any kind of issue running the request will always show as an "ODBC error".

Learning: nothing, other than that Presentation Services hit an error.

State: HY000. Code: 10058. [NQODBC] [SQL_STATE: HY000] [nQSError: 10058] A general error has occurred. (HY000)- Learning: nothing. Something went wrong, somewhere, and OBIEE was involved (

nQSError).

- Learning: nothing. Something went wrong, somewhere, and OBIEE was involved (

State: HY000. Code: 12010. [nQSError: 12010] Communication error connecting to remote end point: address = demo.us.oracle.com; port = 7792. (HY000)- Now we're getting somewhere. Port 7792 is, on this server, the BI Server (OBIS / nqsserver). And, there was an error connecting to it.

- Learning: We failed to successfully connect to the BI Server. Is it running? Was it running but crashed? Is there a network problem? Lots to investigate.

SQL Issued: {call NQSGetQueryColumnInfo('EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version;')}- Learning: This is the logical SQL that Presentation Services was trying to run. Useful to know.

Let's go and have a look at the BI Server log, because something's evidently not right. We know that it was running, because otherwise we'd not have been able to login to OBIEE in the first place.

In /app/oracle/biee/user_projects/domains/bi/servers/obis1/logs/obis1.out there's some bad news:

<NodeManager ComponentManager> <The server 'obis1' with process id 7059 is no longer alive; waiting for the process to die.>

<NodeManager ComponentManager> <Process died.>

<NodeManager ComponentManager> <get latest startup configuration before deciding/trying to restart the server>

<NodeManager ComponentManager> <Server failed so attempting to restart (restart count = 1)>

So it looks like the BI Server crashed. In the folder above where obis1.out is kept, there's a corresponding crash report (note that the process ID matches the log message above), nqsserver_7059_crashreport.txt. Some bits of interest from it:

[...]

Beginning of crash dump...

Signal: 11

================================================================

[...]

Activity type: ExecutePhysical GatewayDbGateway Prepare

DSN:Apache Drill

User Name:weblogic

SQL:SELECT version FROM sys.version;

================================================================

Activity #2 ECID: 005EPt5qYm2Fw000jzwkno0001iJ0001KT,0

================================================================

Activity type: Producer Executing Query

Repository Name:ssi;Subject Area Name:;User Name:weblogic

Logical Hash of SQL: 0x3d5c4ef2SQL:{call NQSGetQueryColumnInfo('EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version;')}

[...]

BACKTRACE:

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilityserver64.so(+0x12a829)[0x7f554995b829]

/lib64/libpthread.so.0(+0xf790)[0x7f5547cde790]

/lib64/libc.so.6(cfree+0x1c)[0x7f554721693c]

/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbc.so(+0xa5c55)[0x7f550f2efc55]

/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbc.so(+0xa4fc0)[0x7f550f2eefc0]

/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbc.so(+0xa53f0)[0x7f550f2ef3f0]

/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbc.so(SQLDriverConnect+0x149)[0x7f550f2d8337]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbgatewayodbc64.so(_ZN8NQSQLAPI18NQSQLDriverConnectEPvS0_RKN3sup6StringIwN7_SASSTL9allocatorIwEEEES8_S8_tbS8_+0x1c8)[0x7f54abfb5078]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbgatewayodbc64.so(_ZN24OdbcDataSourceConnection7ConnectERK6SignonbjN10ServerInfo17TxnIsolationLevelEi+0x275)[0x7f54abfcbb45]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilityserver64.so(_ZN20DataSourceConnection7ConnectERK6SignonbRK14GatewayOptions+0x79)[0x7f55498d6819]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbconnection64.so(_ZN12DbConnection9FixDamageERK14GatewayOptions+0x7c)[0x7f55524e12cc]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbconnection64.so(_ZN20SingleThreadedDbPool22GetAvailableConnectionERK6SignonR17DbConnectionGuardRbRK14GatewayOptions+0x45e)[0x7f555250513e]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbgateway64.so(_ZN11NQDbGateway7PrepareEv+0xb14)[0x7f5552294fa4]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsexecutionlist64.so(_ZN8Producer11ExecuteOnceEv+0xb4c)[0x7f555146192c]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN16NQExecutionState17ExecuteSystemMainEv+0x82)[0x7f5549c92032]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN15NQThreadJobBase17ExecuteSystemMainEv+0x51)[0x7f5549cd9dd1]

/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbconnection64.so(_ZN18NQThreadServerBaseI17ManagedJobFunctorE11ScheduleJobEv+0x40)[0x7f5552509650]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN19NQThreadServerNoJob15ExecuteUserMainEv+0x16)[0x7f5549cda256]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN16NQExecutionState17ExecuteSystemMainEv+0x82)[0x7f5549c92032]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN8NQThread17ExecuteSystemMainEv+0x5b)[0x7f5549cd897b]

/app/oracle/biee/bi/bifoundation/server/bin/libnqutilitygeneric64.so(_ZN8NQThread15ThreadMainEntryEPv+0x2e)[0x7f5549cd8c9e]

/lib64/libpthread.so.0(+0x7a51)[0x7f5547cd6a51]

/lib64/libc.so.6(clone+0x6d)[0x7f554728393d]

Signal 11 is "SIGSEGV", or segmentation fault, which is bad, m'kay?

Tracing OBIEE's ODBC Connectivity with strace

Looking in at the ODBC driver.log we can see that something happened, but no error logged. Here's everything, minus TRACE logs:

Aug 09 07:13:43.283 INFO 2896611072 Driver::LogVersions: SDK Version: 09.05.07.1008

Aug 09 07:13:43.288 INFO 2896611072 Driver::LogVersions: DSII Version: 1.2.1.1001

Aug 09 07:13:43.288 INFO 2896611072 Driver::Initialize: Database CHAR Encoding: UTF-8

Aug 09 07:13:43.288 INFO 2896611072 Driver::Initialize: Database WCHAR Encoding: UTF-8

Aug 09 07:13:43.288 INFO 2896611072 Driver::Initialize: ANSI CHAR Encoding: UTF-8

Aug 09 07:13:43.288 INFO 2896611072 Driver::Initialize: Driver Manager WCHAR Encoding: UTF-32LE

Aug 09 07:13:43.289 INFO 2896611072 Driver::Initialize: Detected Driver Manager: DataDirect Driver Manager, 07.14.0119 (U0115)

Aug 09 07:13:43.289 INFO 2896611072 Driver::Initialize: Locale name: en_US

Aug 09 07:13:43.289 INFO 2896611072 Driver::Initialize: Bitness: 64-bit

Aug 09 07:13:43.290 INFO 2896611072 CInterface::SQLAllocHandle: Allocating environment handle.

Aug 09 07:13:43.293 INFO 2896611072 CInterface::SQLAllocHandle: Allocating connection handle.

Aug 09 07:13:43.298 ERROR 2896611072 CInterface::SQLGetConnectAttr: [MapR][ODBC] (10210) Attribute identifier invalid or not supported: 1063

Aug 09 07:13:43.300 INFO 2896611072 CInterface::SQLFreeHandle: Freeing connection handle.

Aug 09 07:13:43.301 INFO 2896611072 CInterface::SQLFreeHandle: Freeing environment handle.

Aug 09 07:13:43.312 INFO 2896611072 CInterface::SQLAllocHandle: Allocating environment handle.

Aug 09 07:13:43.315 INFO 2896611072 Environment::SQLSetEnvAttr: Attribute: SQL_ATTR_ODBC_VERSION (200)

Aug 09 07:13:43.315 INFO 2896611072 EnvironmentAttributes::SetAttribute: Setting ODBC version to: 2

Aug 09 07:13:43.315 INFO 2896611072 CInterface::SQLAllocHandle: Allocating connection handle.

Aug 09 07:13:43.317 INFO 2896611072 Environment::SQLGetEnvAttr: Attribute: Unknown Attribute (1065)

Aug 09 07:13:43.317 INFO 2896611072 Connection::SQLGetInfoW: InfoType: SQL_DRIVER_ODBC_VER (77)

One thing that stands out (with the benefit of hindsight because I've been hacking away at this for a while) is

Driver::Initialize: Detected Driver Manager: DataDirect Driver Manager, 07.14.0119 (U0115)

Whereas in my successful standalone test above it was

Driver::Initialize: Detected Driver Manager: iODBC, 3.52.7

So now let's do some digging. Using strace (which I've written about previously) we can see every system call that OBIEE makes, letting us audit the files that it reads and libraries that it loads. You can invoke strace at process startup or, easier, attach it to a running one. Since the BI Server also creates child processes, and also may crash (as here), we'll use a loop to make sure we've always got a strace running against it until we opt to cancel it:

while [ 1 -eq 1 ]; do strace -f -p $(pgrep nqsserver) -tt -y -s 4096 -o ~/nqs_trace_$(date +%Y%m%dT%H%M%S%N).out;done

If the BI Server isn't running, you'll just see

strace: Invalid process id: '-tt'

But once it starts, you'll get

Process 8457 attached

The output is written to the home folder, in files named nqs_trace_ followed by the timestamp at which the trace started. To cancel it, hit Ctrl-C several times to break out of strace and the while loop. If you leave this running for a long period of time you'll likely fill the filesystem and possibly make the server unusable…

Using your text editor of choice, you can search through the strace output to try and pick apart what's happening. Even if you don't find the cause of the error, you can at least rule other causes out this way. For example, whether a configuration file that you think is being used actually is, or where a library is actually being read from.

First up in searching the trace for odbc is the BI Server's own ODBC gateway library being opened and read:

7222 07:13:43.080212 open("/app/oracle/biee/bi/bifoundation/server/bin/libnqsdbgatewayodbc64.so", O_RDONLY) = 31

Next it checks for the odbc.ini configuration file and gets a success return code (0), but at this point it doesn't read it

8382 07:13:43.097901 stat("/app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini", {st_mode=S_IFREG|0640, st_size=2613, ...}) = 0

and then looks for the ODBC trace library, and this is interesting because it can't find it. One of the very useful things with strace is to be able to see all the paths that a program tries to read for a given file. Here you see a selection of the paths it's trying, and the failure (-1 ENOENT (No such file or directory)) each time:

8382 07:13:43.098135 stat("/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/odbctrac.so", 0x7f54aca68b90) = -1 ENOENT (No such file or directory)

8382 07:13:43.098190 stat("/usr/local/lib//app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/odbctrac.so", 0x7f54aca68b90) = -1 ENOENT (No such file or directory)

8382 07:13:43.098230 stat("/usr/local/lib/..//app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/odbctrac.so", 0x7f54aca68b90) = -1 ENOENT (No such file or directory)

8382 07:13:43.098274 stat("/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib//app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/odbctrac.so", 0x7f54aca68b90) = -1 ENOENT (No such file or directory)

8382 07:13:43.098312 stat("/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/..//app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/odbctrac.so", 0x7f54aca68b90) = -1 ENOENT (No such file or directory)

We can use one of my favourite linux utilities, locate, to find where this file actually is:

[oracle@demo ~]$ locate odbctrac.so

/app/oracle/biee/bi/bifoundation/odbc/lib/odbctrac.so

/app/oracle/biee/bi/common/ODBC/Merant/5.3/lib/odbctrac.so

You can install this on your system with sudo yum install -y mlocate, and if you've recently changed files (e.g. installed a new package) refresh the database with sudo updatedb before running locate.

This is one interesting point of note here, then, that the ODBC trace library isn't loading properly. Let's note this for now, and carry on through the trace file. Next up it opens and reads the odbc.ini file:

8382 07:13:43.102664 open("/app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini", O_RDONLY) = 31

8382 07:13:43.102786 read(31</app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini>, "[ODBC]nTrace=1nTraceFile=/app/oracle/biee/user_projects/domains/bi/odbctrace.outnTraceDll=/app/oracle/biee/bi/common/ODBC/M [...]

Now we get into the meat of it. The Apache Drill ODBC driver gets checked that it exists:

8382 07:13:43.109475 stat("/opt/mapr/drillodbc/lib/64/libmaprdrillodbc64.so", {st_mode=S_IFREG|0645, st_size=38794216, ...}) = 0

and then opened and read. Next OBIEE tries to read the libdrillClient.so library, starting here:

8382 07:13:43.113071 open("/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

Note that this fails (-1 ENOENT), and it works it's way through other paths until it succeeds:

8382 07:13:43.113071 open("/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113189 open("/app/oracle/biee/bi/bifoundation/server/bin/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113238 open("/app/oracle/biee/bi/bifoundation/web/bin/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113274 open("/app/oracle/biee/bi/clients/Essbase/EssbaseRTC/bin/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113309 open("/app/oracle/biee/bi/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113341 open("/app/oracle/biee/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113374 open("/app/oracle/biee/oracle_common/adr/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113408 open("/app/oracle/biee/oracle_common/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113440 open("/usr/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113484 open("/lib/libdrillClient.so", O_RDONLY) = -1 ENOENT (No such file or directory)

8382 07:13:43.113517 open("/opt/mapr/drillodbc/lib/64/libdrillClient.so", O_RDONLY) = 31

Where do these paths come from? LD_LIBRARY_PATH! And we can confirm that by comparing the above sequence of folders with the value of LD_LIBRARY_PATH that we saw above, split out onto lines here with a bit of sed magic:

[oracle@demo ~]$ strings /proc/$(pgrep nqsserver)/environ|grep LD_LIBRARY_PATH|sed 's/:/n/g'|sed 's/=/n/g'

LD_LIBRARY_PATH

/app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib

/app/oracle/biee/bi/bifoundation/server/bin

/app/oracle/biee/bi/bifoundation/web/bin

/app/oracle/biee/bi/clients/Essbase/EssbaseRTC/bin

/app/oracle/biee/bi/lib

/app/oracle/biee/lib

/app/oracle/biee/oracle_common/adr

/app/oracle/biee/oracle_common/lib

/usr/lib

/lib

/opt/mapr/drillodbc/lib/64

So...we've read the odbc.ini config file, loaded the Apache Drill ODBC driver (libmaprdrillodbc64.so) and associated library (libdrillClient.so). Now a further library is opened and read:

8382 07:13:43.271710 open("/opt/mapr/drillodbc/lib/64/SimbaDrillODBC.did", O_RDONLY) = 31

and then the Apache Drill ODBC driver configuration file that we created earlier (and referenced in the MAPRDRILLINI environment variable) is opened and read:

8382 07:13:43.281356 open("/home/oracle/.mapr.drillodbc.ini", O_RDONLY) = 31

Because we've enabled logging in the driver configuration, what happens next in the strace is that log file being created and written to:

8382 07:13:43.283527 mkdir("/tmp/odbc.log/", 0755) = -1 EEXIST (File exists)

8382 07:13:43.283834 open("/tmp/odbc.log/driver.log", O_WRONLY|O_CREAT|O_APPEND, 0666) = 31

8382 07:13:43.284037 fstat(31</tmp/odbc.log/driver.log>, {st_mode=S_IFREG|0640, st_size=174018, ...}) = 0

8382 07:13:43.284220 mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7f54ad460000

8382 07:13:43.284338 fstat(31</tmp/odbc.log/driver.log>, {st_mode=S_IFREG|0640, st_size=174018, ...}) = 0

8382 07:13:43.284389 lseek(31</tmp/odbc.log/driver.log>, 174018, SEEK_SET) = 174018

8382 07:13:43.284438 write(31</tmp/odbc.log/driver.log>, "Aug 09 07:13:43.283 INFO 2896611072 Driver::LogVersions: SDK Version: 09.05.07.1008n", 85) = 85

[...]

After a bunch of trace logging is written, the ODBC messages translation file is read:

8382 07:13:43.290888 read(34</opt/mapr/drillodbc/ErrorMessages/en-US/ODBCMessages.xml>, "<?xml version="1.0" encoding="utf-8"?>n<!DOCTYPE Messages [n <!ELEMENT Messages (Package*)>nn <!ELEMENT Package (Error*)>n <!ATTLI

The next file read is odbc.ini again

8382 07:13:43.304659 read(35</app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini>, "", 4096) = 0

followed by odbc.ini in the default /etc/ path (which exists but is zero bytes):

8382 07:13:43.306727 open("/etc/odbc.ini", O_RDONLY) = 35

The odbcinst.ini file is then read, from /etc/odbcinst.ini (which is created by unixODBC on installation, and has entries for non-existant mysql/postgresql drivers):

8382 07:13:43.307417 read(35</etc/odbcinst.ini>, "# Example driver definitionsnn# Driver from the postgresql-odbc packagen# Setup from the unixODBC packagen[PostgreSQL]nDescriptiont= ODBC for PostgreSQLnDrivertt= /usr/lib/psqlod

and then the version for Drill:

8382 07:13:43.308040 read(35</home/oracle/.odbcinst.ini>, "[ODBC Drivers]nMapR Drill ODBC Driver 64-bit=Installednn[MapR Drill ODBC Driver 64-bit]nDescription=MapR Drill ODBC Driver(64-bit)nDriver=/opt/mapr/drillodbc/lib/64/libmaprd

Back to the odbc.ini file, both OBIEE and default (empty) system:

8382 07:13:43.309065 open("/app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini", O_RDONLY) = 35

8382 07:13:43.309619 read(35</etc/odbc.ini>, "", 4096) = 0

After another read of the two odbcinst.ini files (per above), there's logging information written

8382 07:13:43.312345 write(31</tmp/odbc.log/driver.log>, "Aug 09 07:13:43.312 INFO 2896611072 CInterface::SQLAllocHandle: Allocating environment handle.n", 96) = 96

[...]

8382 07:13:43.317106 write(31</tmp/odbc.log/driver.log>, "Aug 09 07:13:43.317 INFO 2896611072 Connection::SQLGetInfoW: InfoType: SQL_DRIVER_ODBC_VER (77)n", 97) = 97

8382 07:13:43.317141 stat("/tmp/odbc.log/driver.log", {st_mode=S_IFREG|0640, st_size=179550, ...}) = 0

And then, bang.

8382 07:13:43.317198 --- SIGSEGV {si_signo=SIGSEGV, si_code=SEGV_MAPERR, si_addr=0x1f} ---

After which, the crashreport is written:

8382 07:13:43.317249 open("/app/oracle/biee/user_projects/domains/bi/servers/obis1/nqsserver_7059_crashreport.txt", O_WRONLY|O_CREAT|O_APPEND, 0600) = 35

[...]

So from this we can see that the ODBC driver is being loaded from the path we expected, and the configuration files that we expected are being read too. So no smoking gun - but a couple of possible problems ruled out.

Reproducing the Crash on Demand

With this kind of problem it's often a case of working through lots of different configuration settings to obtain further diagnostic evidence - which requires reproducing the problem each time. Instead of manually running the query through Answers which triggers the problem, I moved this part of the diagnostics to the command line, with nqcmd. I copied the logical SQL to a file drill-test.lsql:

EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version;

and then run with:

/app/oracle/biee/user_projects/domains/bi/bitools/bin/nqcmd.sh -d AnalyticsWeb -u weblogic -p Admin123 -s ~/drill-test.lsql

gives the same result (error) but easier to do.

Aug 09, 2016 7:59:53 PM oracle.bi.endpointmanager.impl.bienv.BIEnvComponents loadFromInputStream

INFO: Reading components from: /app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/bienv-components.xml ...

-------------------------------------------------------------------------------

Oracle BI ODBC Client

Copyright (c) 1997-2015 Oracle Corporation, All rights reserved

-------------------------------------------------------------------------------

EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version

EXECUTE PHYSICAL CONNECTION POOL "Apache Drill"."Apache Drill" SELECT version FROM sys.version

[unixODBC][nQSError: 12010] Communication error connecting to remote end point: address = demo.us.oracle.com; port = 7792.[unixODBC][NQODBC] [SQL_STATE: S1000] [nQSError: 10058] A general error has occurred.

Statement preparation failed

Processed: 1 queries

Encountered 1 errors

Stuff Ruled Out

The

odbcinst.inifile doesn't have to be present - strace showed it is looked for, but the result is the same even if it's not (or is but doesn't have an entry for the Drill drivers):4270 20:00:06.692891 open("/home/oracle/.odbcinst.ini", O_RDONLY) = -1 ENOENT (No such file or directory)Changing the connection pool call interface to ODBC 3.5 doesn't fix things, nor does changing the database type to Apache Hadoop (grasping at straws...)

Driver Manager

One of the routes of investigation is the Driver Manager. This is for two reasons; one is that on Windows ODBC is native, the driver manager is built in. As mentioned, @cfiston has got this to work - but on Windows. So platform differences aside (he says casually), this could be something. The second reason is the difference in the driver manager that shows in the ODBC log between a successful (via iodbctest) request

Driver::Initialize: Detected Driver Manager: iODBC, 3.52.7

versus an unsuccessful one (in OBIEE):

Driver::Initialize: Detected Driver Manager: DataDirect Driver Manager, 07.14.0119 (U0115)

Before we dig into this further let's take a look at the driver manager libraries that we've got on the system. DataDirect and unixODBC are both libodbcinst.so whilst iODBC is libiodbc.so. We'll use a bit of bash magic to find all the files, and list their sizes and checksums so we can spot which are the same:

[oracle@demo odbc.log]$ locate libodbcinst|xargs -Ifoo ls -l foo

-rw-r----- 1 oracle oinstall 1380087 2015-10-13 03:04 /app/oracle/biee/bi/bifoundation/odbc/lib/libodbcinst.so

-rw-r----- 1 oracle oinstall 1380087 2015-10-03 02:59 /app/oracle/biee/bi/common/ODBC/Merant/5.3/lib/libodbcinst.so

-rw-r----- 1 oracle oinstall 1203032 2015-10-03 02:59 /app/oracle/biee/bi/common/ODBC/Merant/7.0.1/lib/libodbcinst.so

-rw-r----- 1 oracle oinstall 1239728 2015-10-03 02:59 /app/oracle/biee/bi/common/ODBC/Merant/7.1.2/lib/libodbcinst.so

-rw-r----- 1 oracle oinstall 1244304 2015-10-03 02:59 /app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbcinst.so

lrwxrwxrwx 1 root root 20 2016-08-08 11:55 /usr/lib64/libodbcinst.so -> libodbcinst.so.2.0.0

lrwxrwxrwx 1 root root 20 2016-08-08 11:55 /usr/lib64/libodbcinst.so.2 -> libodbcinst.so.2.0.0

-rwxr-xr-x 1 root root 68928 2014-08-16 19:58 /usr/lib64/libodbcinst.so.2.0.0

[oracle@demo odbc.log]$ locate libodbcinst|xargs -Ifoo md5sum foo|sort

0bfd147ff6b41daee527861143040f1b /app/oracle/biee/bi/common/ODBC/Merant/7.1.2/lib/libodbcinst.so

7eaee346f92169fc2e2ba5900dceefa3 /app/oracle/biee/bi/common/ODBC/Merant/5.3/lib/libodbcinst.so

b340968ee0a2188427a66203fb0a56b7 /app/oracle/biee/bi/bifoundation/odbc/lib/libodbcinst.so

dadcb67d26d42b0c2535a9be44d2b46f /usr/lib64/libodbcinst.so

dadcb67d26d42b0c2535a9be44d2b46f /usr/lib64/libodbcinst.so.2

dadcb67d26d42b0c2535a9be44d2b46f /usr/lib64/libodbcinst.so.2.0.0

eccb81df3cdaaeb83faa86dfc6187844 /app/oracle/biee/bi/common/ODBC/Merant/7.1.4/lib/libodbcinst.so

ed27493fd52534e181e0e6cd29c6a48a /app/oracle/biee/bi/common/ODBC/Merant/7.0.1/lib/libodbcinst.so

[oracle@demo odbc.log]$ locate libiodbcinst|xargs -Ifoo ls -l foo

lrwxrwxrwx 1 root root 22 2016-08-08 11:24 /usr/lib64/libiodbcinst.so.2 -> libiodbcinst.so.2.1.19

-rwxr-xr-x 1 root root 72896 2010-06-23 11:07 /usr/lib64/libiodbcinst.so.2.1.19

[oracle@demo odbc.log]$ locate libiodbcinst|xargs -Ifoo md5sum foo|sort

44a432e25d176079cf30e805c648fc86 /usr/lib64/libiodbcinst.so.2

44a432e25d176079cf30e805c648fc86 /usr/lib64/libiodbcinst.so.2.1.19

What's interesting from this is that the libodbcinst.so installed within OBIEE (bifoundation/odbc/lib/) is the same size as the DataDirect 5.3 one, but a different checksum.

OBIEE uses ODBC for its own internal connectivity between Presentation Services (OBIPS) and BI Server (OBIS). We saw in the strace output above that odbctrac.so wasn't successfully loaded, so let's fix that in /app/oracle/biee/user_projects/domains/bi/config/fmwconfig/bienv/core/odbc.ini:

[ODBC]

Trace=1

TraceFile=/tmp/odbctrace.out

TraceDll=/app/oracle/biee/bi/bifoundation/odbc/lib/odbctrac.so

[...]

With this enabled there's now debug information written - although in this case it's about OBIEE using ODBC to connect to its internal MDS schemas (which in 12c it looks to use ODBC for):

ppid=24928:pid= 3bd8:754f8720 ENTER SQLConnect

HDBC 0x01385750

UCHAR * 0x012fbfb0 [ 15] "opss_datasource"

SWORD 15

UCHAR * 0x012fcba0 [ 9] "BIEE_OPSS"